Statistics and Machine Learning

# import libraries

import matplotlib.pyplot as plt

import numpy as np

import scipy.stats as stats

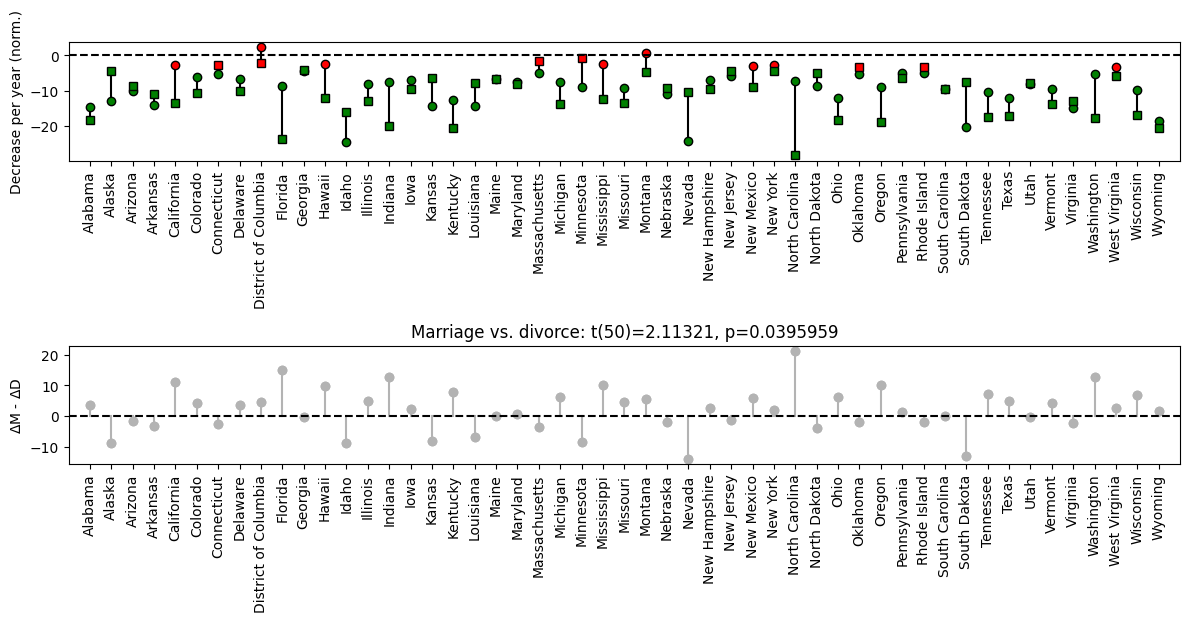

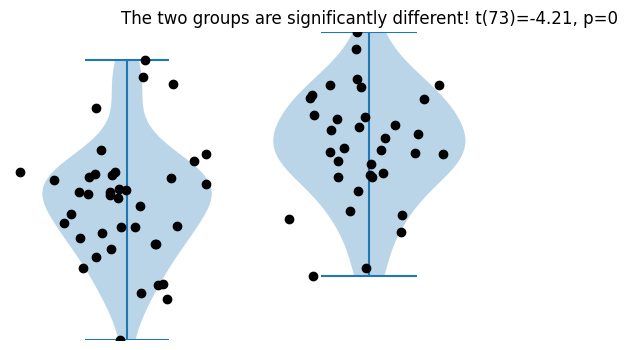

## this section is for parameters that you can specify

# specify the averages of the two groups

average_group1 = 40

average_group2 = 45

# the amount of individual variability (same value for both groups)

standard_deviation = 5.6

# sample sizes for each group

samples_group1 = 40

samples_group2 = 35

# this section generates the data (don't need to modify)

# generate the data

data_group1 = np.random.randn(samples_group1)*standard_deviation + average_group1

data_group2 = np.random.randn(samples_group2)*standard_deviation + average_group2

# convenient collection of sample sizes

ns = [ samples_group1, samples_group2 ]

datalims = [np.min(np.hstack((data_group1,data_group2))), np.max(np.hstack((data_group1,data_group2)))]

## this section is for data visualization (don't need to modify)

fig,ax = plt.subplots(1,2,figsize=(6,4))

ax[0].violinplot(data_group1)

ax[0].plot(1+np.random.randn(samples_group1)/10,data_group1,'ko')

ax[0].set_ylim(datalims)

ax[0].axis('off')

ax[1].violinplot(data_group2)

ax[1].plot(1+np.random.randn(samples_group2)/10,data_group2,'ko')

ax[1].set_ylim(datalims)

ax[1].axis('off')

# 2-group t-test

t,p = stats.ttest_ind(data_group1,data_group2)

# print the information to the title

sigtxt = ('',' NOT')

plt.title('The two groups are%s significantly different! t(%g)=%g, p=%g'%(sigtxt[int(p>.05)],sum(ns)-2,np.round(t,2),np.round(p,3)))

plt.show()

Representing types of data

## create variables of different types (classes)

# data numerical (here as a list)

numdata = [ 1, 7, 17, 1717 ]

# character / string

chardata = 'xyz'

# double-quotes also fine

strdata = "x"

# boolean (aka logical)

logitdata = True # notice capitalization!

# a list can be used like a MATLAB cell

listdata = [ [3, 4, 34] , 'hello' , 4 ]

# dict (kindof similar to MATLAB structure)

dictdata = dict()

dictdata['name'] = 'Mike'

dictdata['age'] = 25

dictdata['occupation'] = 'Nerdoscientist'

# let's see what the workspace looks like

%whos

Variable Type Data/Info

-----------------------------------------

average_group1 int 40

average_group2 int 45

ax ndarray 2: 2 elems, type `object`, 16 bytes

chardata str xyz

data_group1 ndarray 40: 40 elems, type `float64`, 320 bytes

data_group2 ndarray 35: 35 elems, type `float64`, 280 bytes

datalims list n=2

dictdata dict n=3

fig Figure Figure(600x400)

listdata list n=3

logitdata bool True

np module <module 'numpy' from '/Us<...>kages/numpy/__init__.py'>

ns list n=2

numdata list n=4

p float64 7.281748107399956e-05

plt module <module 'matplotlib.pyplo<...>es/matplotlib/pyplot.py'>

samples_group1 int 40

samples_group2 int 35

sigtxt tuple n=2

standard_deviation float 5.6

stats module <module 'scipy.stats' fro<...>scipy/stats/__init__.py'>

strdata str x

t float64 -4.206283122402289

# clear the Python workspace

%reset -sf

Visualizing data

Line Plots

# import libraries

import matplotlib.pyplot as plt

import numpy as np

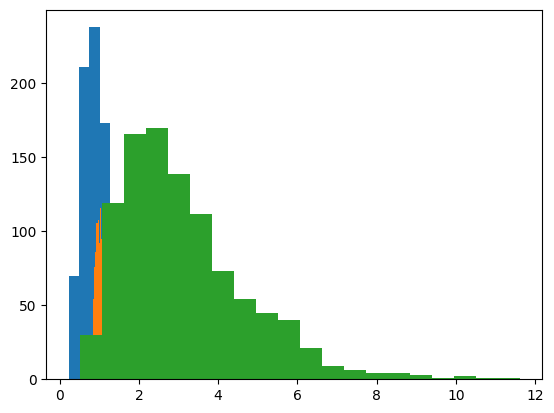

## create data for the plot

# number of data points

n = 1000

# generate log-normal distribution

data1 = np.exp( np.random.randn(n)/2 )

data2 = np.exp( np.random.randn(n)/10 )

data3 = np.exp( np.random.randn(n)/2 + 1 )

## plots of their histograms

# number of histogram bins

k = 20

plt.hist(data1,bins=k)

plt.hist(data2,bins=k)

plt.hist(data3,bins=k)

plt.show()

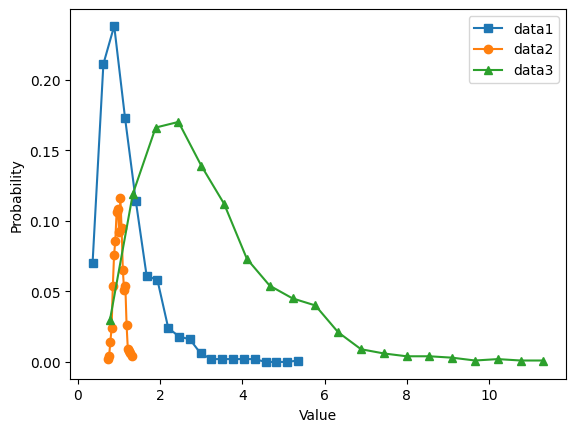

# histogram discretization for the datasets

y1,x1 = np.histogram(data1,bins=k)

xx1 = (x1[0:-1] + x1[1:]) / 2

y1 = y1 / sum(y1) # convert to probability

y2,x2 = np.histogram(data2,bins=k)

xx2 = (x2[0:-1] + x2[1:]) / 2

y2 = y2 / sum(y2) # convert to probability

y3,x3 = np.histogram(data3,bins=k)

xx3 = (x3[0:-1] + x3[1:]) / 2

y3 = y3 / sum(y3) # convert to probability

# show the plots

plt.plot(xx1,y1,'s-',label='data1')

plt.plot(xx2,y2,'o-',label='data2')

plt.plot(xx3,y3,'^-',label='data3')

plt.legend()

plt.xlabel('Value')

plt.ylabel('Probability')

plt.show()

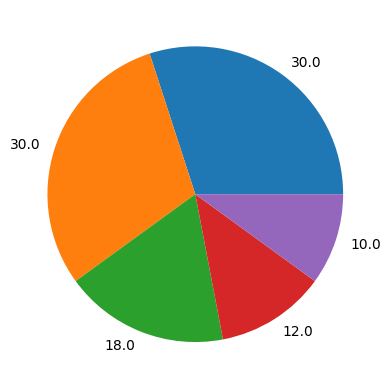

Pie charts

## create data for the plot

nbins = 5

totalN = 100

rawdata = np.ceil(np.logspace(np.log10(1/2),np.log10(nbins-.01),totalN))

# prepare data for pie chart

uniquenums = np.unique(rawdata)

data4pie = np.zeros(len(uniquenums))

for i in range(len(uniquenums)):

data4pie[i] = sum(rawdata==uniquenums[i])

## create data for the plot

nbins = 5

totalN = 100

rawdata = np.ceil(np.logspace(np.log10(1/2),np.log10(nbins-.01),totalN))

# prepare data for pie chart

uniquenums = np.unique(rawdata)

data4pie = np.zeros(len(uniquenums))

for i in range(len(uniquenums)):

data4pie[i] = sum(rawdata==uniquenums[i])

## create data for the plot

nbins = 5

totalN = 100

rawdata = np.ceil(np.logspace(np.log10(1/2),np.log10(nbins-.01),totalN))

# prepare data for pie chart

uniquenums = np.unique(rawdata)

data4pie = np.zeros(len(uniquenums))

for i in range(len(uniquenums)):

data4pie[i] = sum(rawdata==uniquenums[i])

# show the pie chart

plt.pie(data4pie,labels=100*data4pie/sum(data4pie))

plt.show()

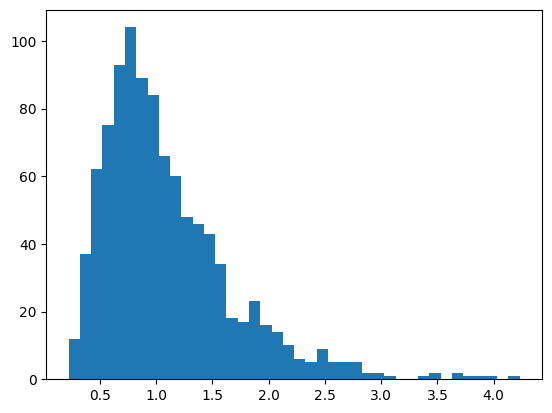

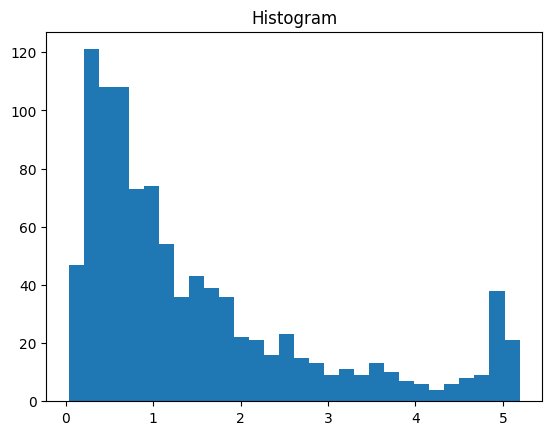

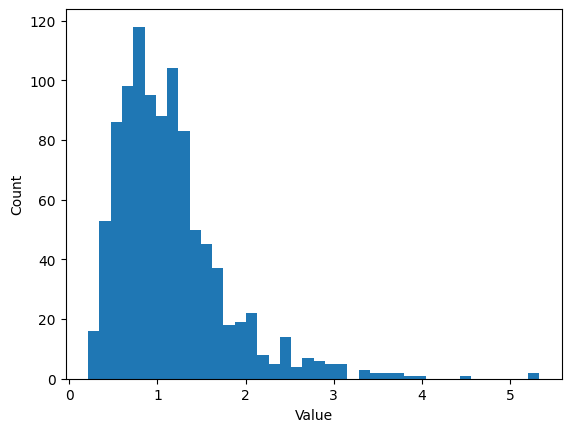

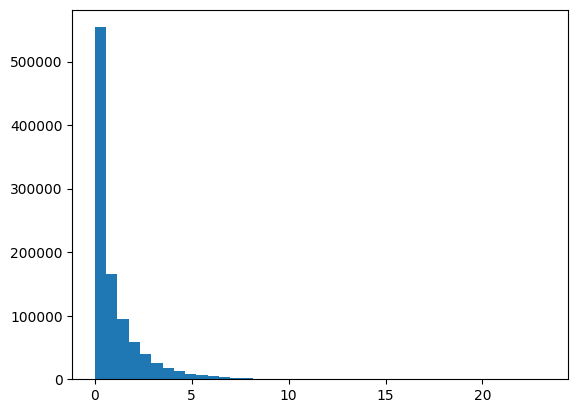

Histograms

## create data for the histogram

# number of data points

n = 1000

# generate data - log-normal distribution

data = np.exp( np.random.randn(n)/2 )

# show as a histogram

# number of histogram bins

k = 40

plt.hist(data,bins=k)

plt.show()

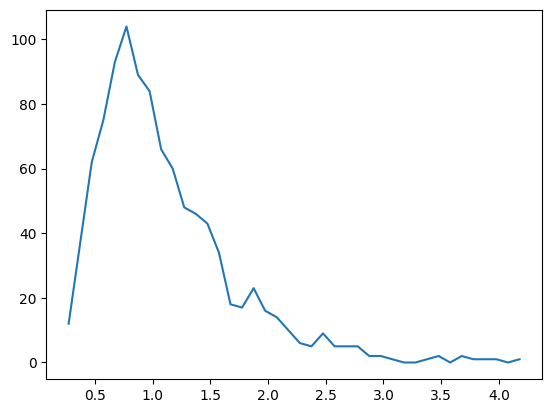

# another option

y,x = np.histogram(data,bins=k)

# bin centers

xx = (x[1:]+x[:-1])/2

plt.plot(xx,y)

plt.show()

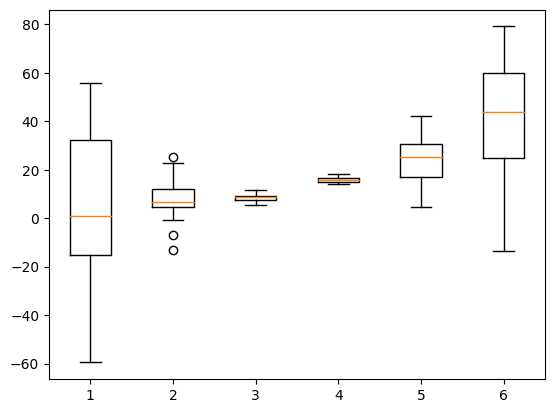

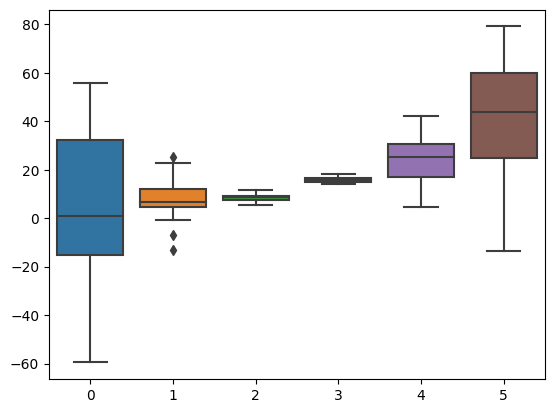

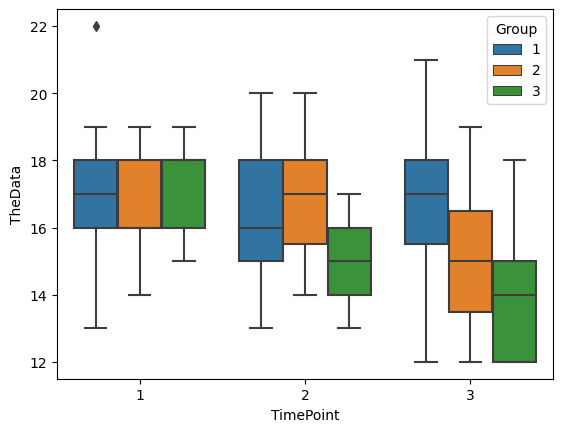

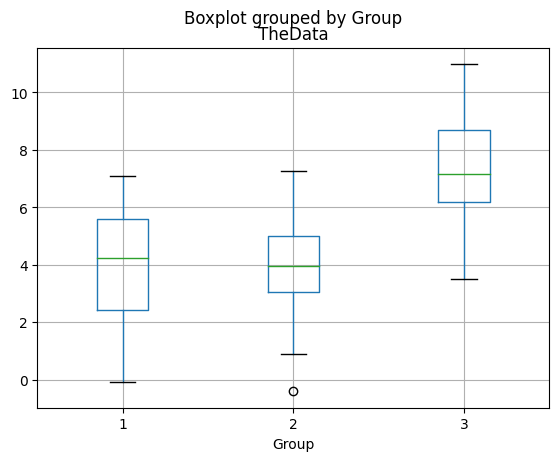

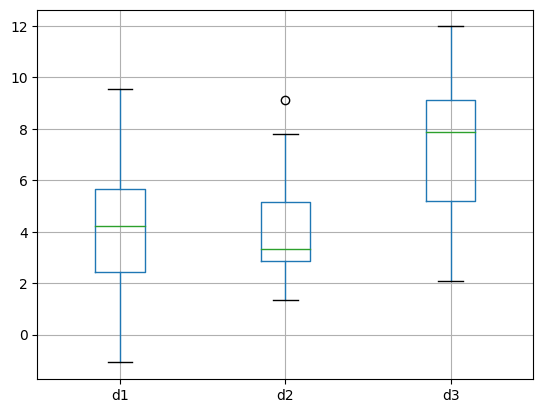

Box-and-whisker plots

# import libraries

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import seaborn as sns

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[19], line 5

3 import numpy as np

4 import pandas as pd

----> 5 import seaborn as sns

ModuleNotFoundError: No module named 'seaborn'

## create data for the bar plot

# data sizes

m = 30 # rows

n = 6 # columns

# generate data

data = np.zeros((m,n))

for i in range(n):

data[:,i] = 30*np.random.randn(m) * (2*i/(n-1)-1)**2 + (i+1)**2

# now for the boxplot

plt.boxplot(data)

plt.show()

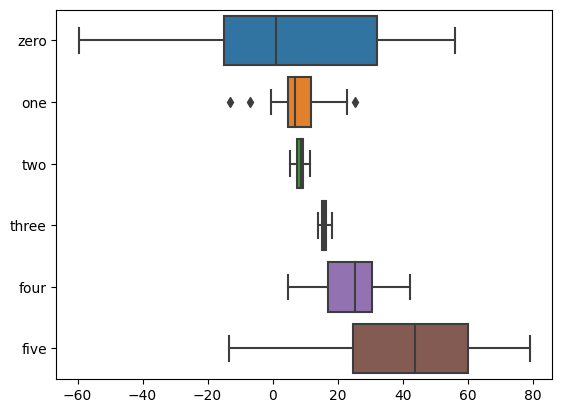

# now with seaborn

sns.boxplot(data=data,orient='v')

plt.show()

# or as a pandas data frame

df = pd.DataFrame(data,columns=['zero','one','two','three','four','five'])

sns.boxplot(data=df,orient='h')

plt.show()

/Users/m0/mambaforge/lib/python3.10/site-packages/seaborn/categorical.py:82: FutureWarning: iteritems is deprecated and will be removed in a future version. Use .items instead.

plot_data = [np.asarray(s, float) for k, s in iter_data]

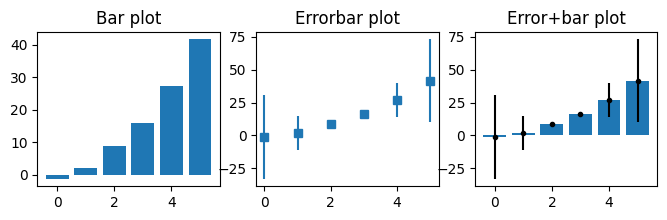

Bar plots

## create data for the bar plot

# data sizes

m = 30 # rows

n = 6 # columns

# generate data

data = np.zeros((m,n))

for i in range(n):

data[:,i] = 30*np.random.randn(m) * (2*i/(n-1)-1)**2 + (i+1)**2

# show the bars!

fig,ax = plt.subplots(1,3,figsize=(8,2))

# 'naked' bars

ax[0].bar(range(n),np.mean(data,axis=0))

ax[0].set_title('Bar plot')

# just the error bars

ax[1].errorbar(range(n),np.mean(data,axis=0),np.std(data,axis=0,ddof=1),marker='s',linestyle='')

ax[1].set_title('Errorbar plot')

# both

ax[2].bar(range(n),np.mean(data,axis=0))

ax[2].errorbar(range(n),np.mean(data,axis=0),np.std(data,axis=0,ddof=1),marker='.',linestyle='',color='k')

ax[2].set_title('Error+bar plot')

plt.show()

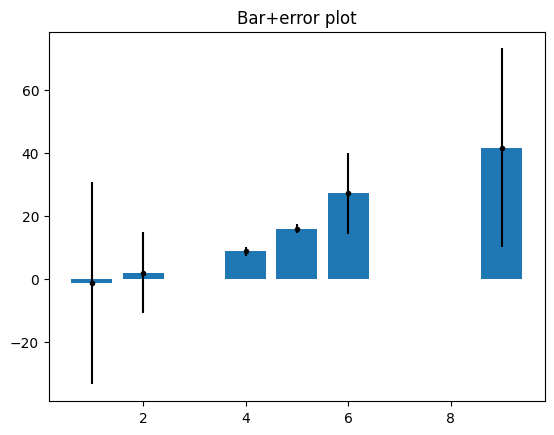

## manually specify x-axis coordinates

xcrossings = [ 1, 2, 4, 5, 6, 9 ]

plt.bar(xcrossings,np.mean(data,axis=0))

plt.errorbar(xcrossings,np.mean(data,axis=0),np.std(data,axis=0,ddof=1),marker='.',linestyle='',color='k')

plt.title('Bar+error plot')

plt.show()

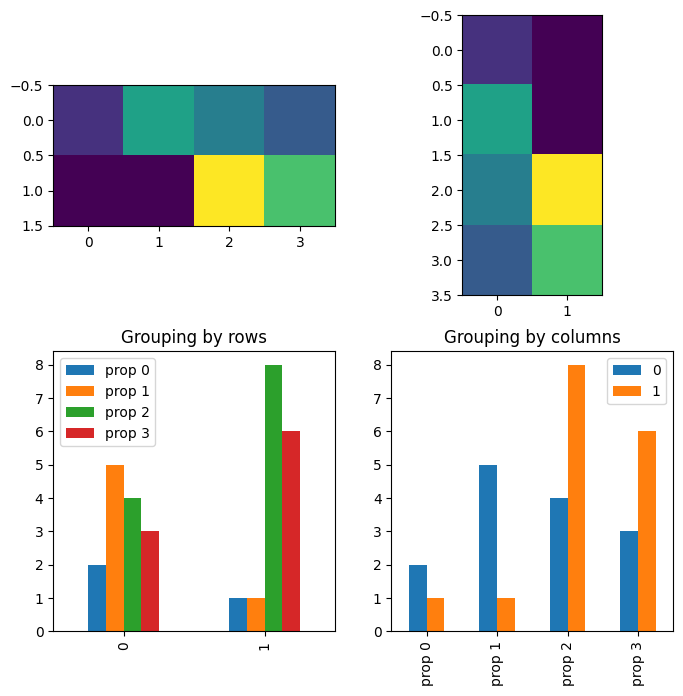

## note about bars from matrices

# data are groups (rows) X property (columns)

m = [ [2,5,4,3], [1,1,8,6] ]

fig,ax = plt.subplots(nrows=2,ncols=2,figsize=(8,8))

# conceptualizing the data as <row> groups of <columns>

ax[0,0].imshow(m)

# using pandas dataframe

df = pd.DataFrame(m,columns=['prop 0','prop 1','prop 2','prop 3'])

df.plot(ax=ax[1,0],kind='bar')

ax[1,0].set_title('Grouping by rows')

# now other orientation (property X group)

ax[0,1].imshow(np.array(m).T)

df.T.plot(ax=ax[1,1],kind='bar')

ax[1,1].set_title('Grouping by columns')

plt.show()

Descriptive statistics

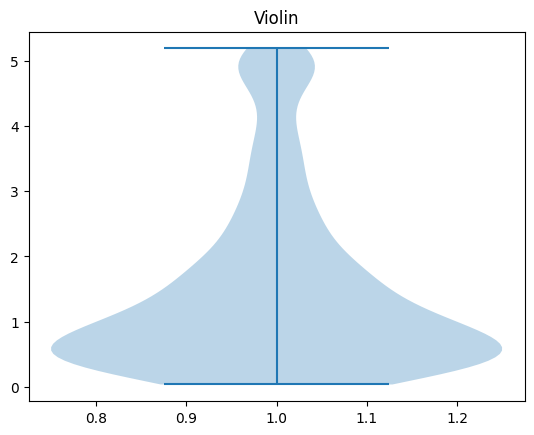

Violin plots

# import libraries

import matplotlib.pyplot as plt

import numpy as np

import scipy.stats as stats

## create the data

n = 1000

thresh = 5 # threshold for cropping data

data = np.exp( np.random.randn(n) )

data[data>thresh] = thresh + np.random.randn(sum(data>thresh))*.1

# show histogram

plt.hist(data,30)

plt.title('Histogram')

plt.show()

# show violin plot

plt.violinplot(data)

plt.title('Violin')

plt.show()

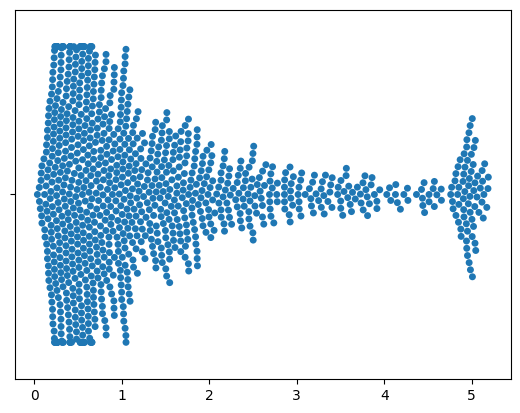

# another option: swarm plot

import seaborn as sns

sns.swarmplot(data,orient='v')

/Users/m0/mambaforge/lib/python3.10/site-packages/seaborn/_decorators.py:36: FutureWarning: Pass the following variable as a keyword arg: x. From version 0.12, the only valid positional argument will be `data`, and passing other arguments without an explicit keyword will result in an error or misinterpretation.

warnings.warn(

/Users/m0/mambaforge/lib/python3.10/site-packages/seaborn/_core.py:1326: UserWarning: Vertical orientation ignored with only `x` specified.

warnings.warn(single_var_warning.format("Vertical", "x"))

/Users/m0/mambaforge/lib/python3.10/site-packages/seaborn/categorical.py:1296: UserWarning: 6.4% of the points cannot be placed; you may want to decrease the size of the markers or use stripplot.

warnings.warn(msg, UserWarning)

<AxesSubplot:>

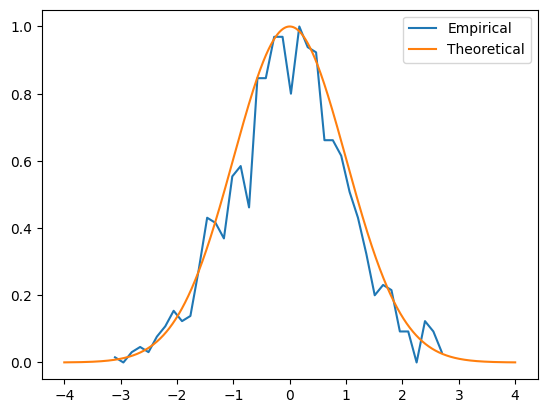

QQ plots

## generate data

n = 1000

data = np.random.randn(n)

# data = np.exp( np.random.randn(n)*.8 ) # log-norm distribution

# theoretical normal distribution given N

x = np.linspace(-4,4,10001)

theonorm = stats.norm.pdf(x)

theonorm = theonorm/max(theonorm)

# plot histograms on top of each other

yy,xx = np.histogram(data,40)

yy = yy/max(yy)

xx = (xx[:-1]+xx[1:])/2

plt.plot(xx,yy,label='Empirical')

plt.plot(x,theonorm,label='Theoretical')

plt.legend()

plt.show()

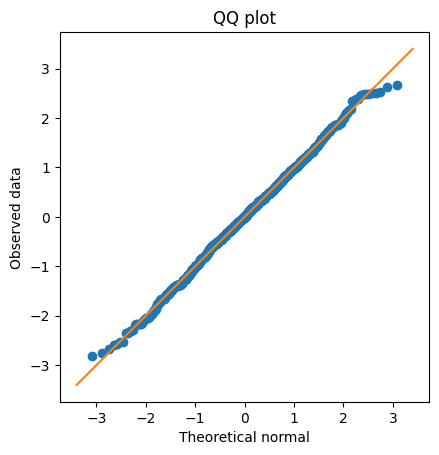

## create a QQ plot

zSortData = np.sort(stats.zscore(data))

sortNormal = stats.norm.ppf(np.linspace(0,1,n))

# QQ plot is theory vs reality

plt.plot(sortNormal,zSortData,'o')

# set axes to be equal

xL,xR = plt.xlim()

yL,yR = plt.ylim()

lims = [ np.min([xL,xR,yL,yR]),np.max([xL,xR,yL,yR]) ]

plt.xlim(lims)

plt.ylim(lims)

# draw red comparison line

plt.plot(lims,lims)

plt.xlabel('Theoretical normal')

plt.ylabel('Observed data')

plt.title('QQ plot')

plt.axis('square')

plt.show()

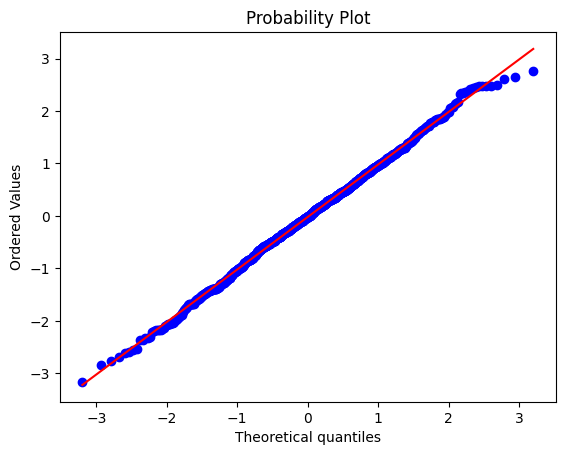

## Python solution

x = stats.probplot(data,plot=plt)

Inter-quartile range (IQR)

## create the data

# random number data

n = 1000

data = np.random.randn(n)**2

# rank-transform the data and scale to 1

dataR = stats.rankdata(data)/n

# find the values closest to 25% and 75% of the distribution

q1 = np.argmin((dataR-.25)**2)

q3 = np.argmin((dataR-.75)**2)

# get the two values in the data

iq_vals = data[[q1,q3]]

# IQR is the difference between them

iqrange1 = iq_vals[1] - iq_vals[0]

# or use Python's built-in function ;)

iqrange2 = stats.iqr(data)

print(iqrange1,iqrange2)

1.211007070531542 1.212300290707061

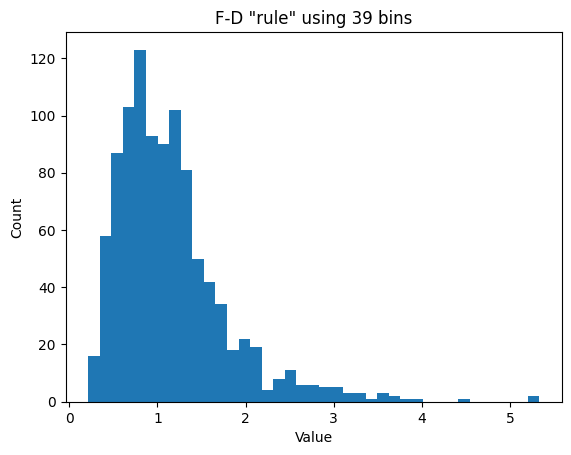

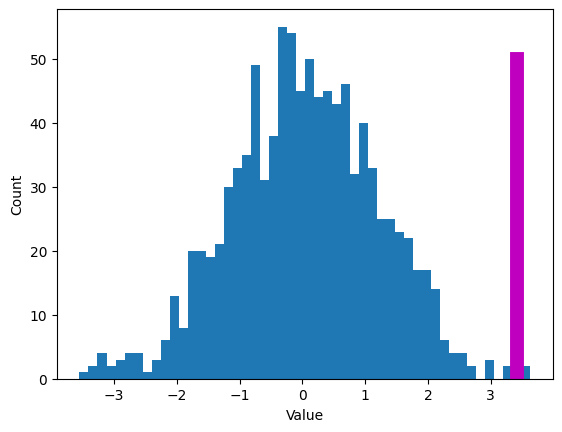

Histogram bins

## create some data

# number of data points

n = 1000

# number of histogram bins

k = 40

# generate log-normal distribution

data = np.exp( np.random.randn(n)/2 )

# one way to show a histogram

plt.hist(data,k)

plt.xlabel('Value')

plt.ylabel('Count')

plt.show()

## try the Freedman-Diaconis rule

r = 2*stats.iqr(data)*n**(-1/3)

b = np.ceil( (max(data)-min(data) )/r )

plt.hist(data,int(b))

# or directly from the hist function

#plt.hist(data,bins='fd')

plt.xlabel('Value')

plt.ylabel('Count')

plt.title('F-D "rule" using %g bins'%b)

plt.show()

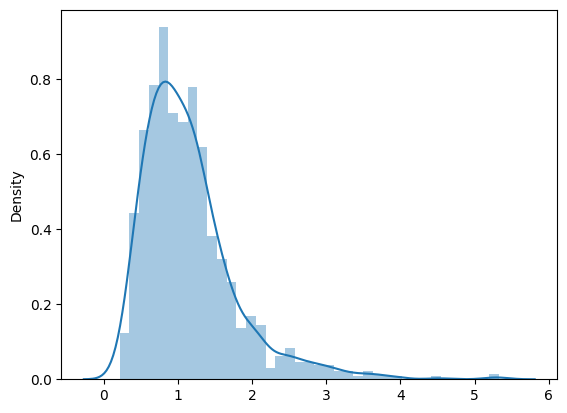

# small aside on Seaborn

import seaborn as sns

sns.distplot(data) # uses FD rule by default

/Users/m0/mambaforge/lib/python3.10/site-packages/seaborn/distributions.py:2619: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).

warnings.warn(msg, FutureWarning)

<AxesSubplot:ylabel='Density'>

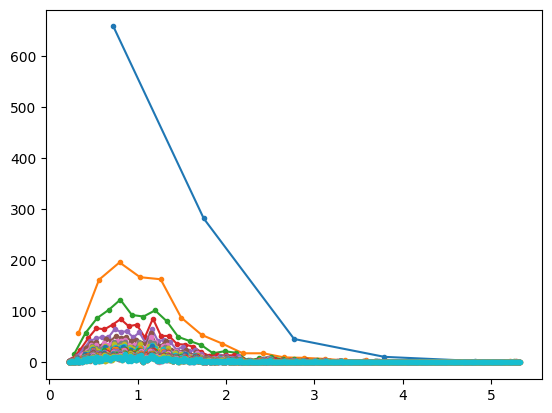

## lots of histograms with increasing bins

bins2try = np.round( np.linspace(5,n/2,30) )

for bini in range(len(bins2try)):

y,x = np.histogram(data,int(bins2try[bini]))

x = (x[:-1]+x[1:])/2

plt.plot(x,y,'.-')

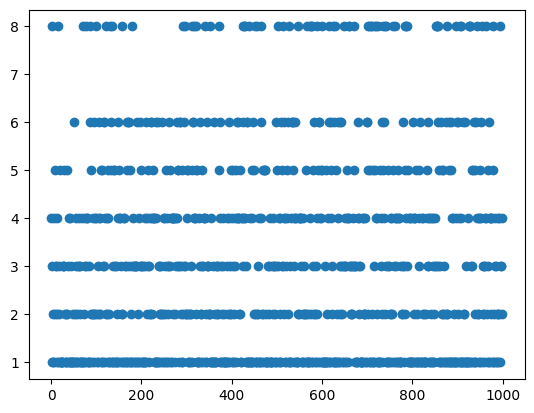

Entropy

## "discrete" entropy

# generate data

N = 1000

numbers = np.ceil( 8*np.random.rand(N)**2 )

numbers[numbers==7] = 4

plt.plot(numbers,'o')

[<matplotlib.lines.Line2D at 0x1518ce050>]

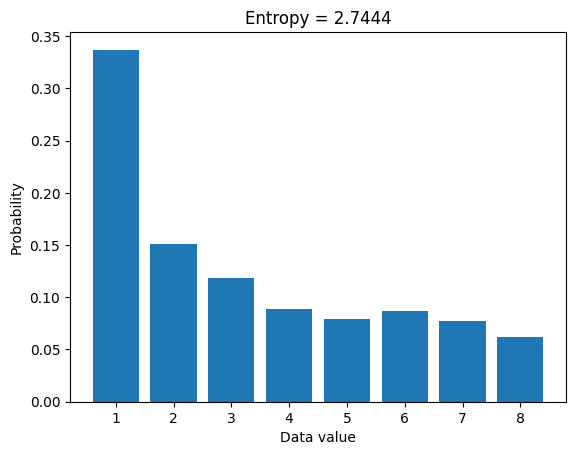

## "discrete" entropy

# generate data

N = 1000

numbers = np.ceil( 8*np.random.rand(N)**2 )

# get counts and probabilities

u = np.unique(numbers)

probs = np.zeros(len(u))

for ui in range(len(u)):

probs[ui] = sum(numbers==u[ui]) / N

# compute entropy

entropee = -sum( probs*np.log2(probs+np.finfo(float).eps) )

# plot

plt.bar(u,probs)

plt.title('Entropy = %g'%entropee)

plt.xlabel('Data value')

plt.ylabel('Probability')

plt.show()

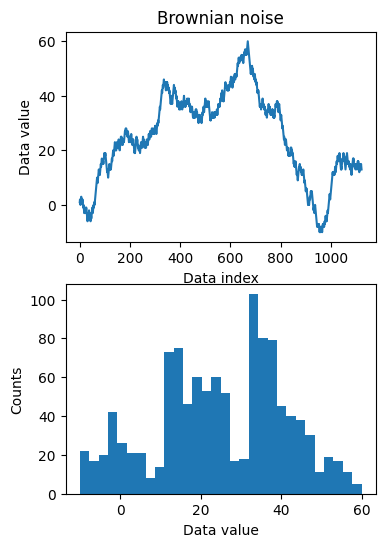

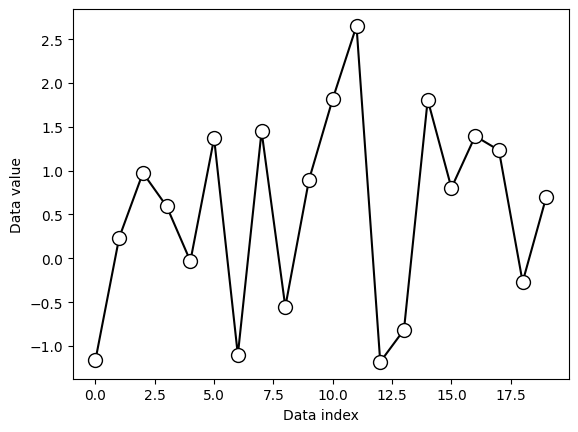

## for random variables

# create Brownian noise

N = 1123

brownnoise = np.cumsum( np.sign(np.random.randn(N)) )

fig,ax = plt.subplots(2,1,figsize=(4,6))

ax[0].plot(brownnoise)

ax[0].set_xlabel('Data index')

ax[0].set_ylabel('Data value')

ax[0].set_title('Brownian noise')

ax[1].hist(brownnoise,30)

ax[1].set_xlabel('Data value')

ax[1].set_ylabel('Counts')

plt.show()

### now compute entropy

# number of bins

nbins = 50

# bin the data and convert to probability

nPerBin,bins = np.histogram(brownnoise,nbins)

probs = nPerBin / sum(nPerBin)

# compute entropy

entro = -sum( probs*np.log2(probs+np.finfo(float).eps) )

print('Entropy = %g'%entro)

Entropy = 5.23685

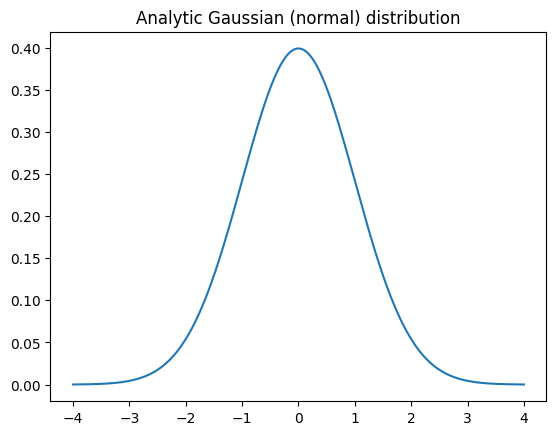

Data from different distributions

## Gaussian

# number of discretizations

N = 1001

x = np.linspace(-4,4,N)

gausdist = stats.norm.pdf(x)

plt.plot(x,gausdist)

plt.title('Analytic Gaussian (normal) distribution')

plt.show()

# is this a probability distribution?

print(sum(gausdist))

# try scaling by dx...

124.99221530601626

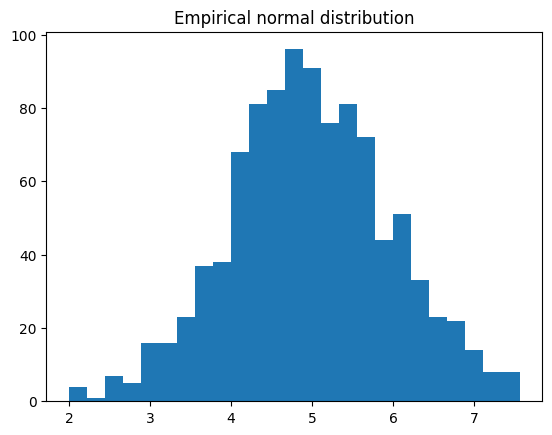

## Normally-distributed random numbers

# parameters

stretch = 1 # variance (square of standard deviation)

shift = 5 # mean

n = 1000

# create data

data = stretch*np.random.randn(n) + shift

# plot data

plt.hist(data,25)

plt.title('Empirical normal distribution')

plt.show()

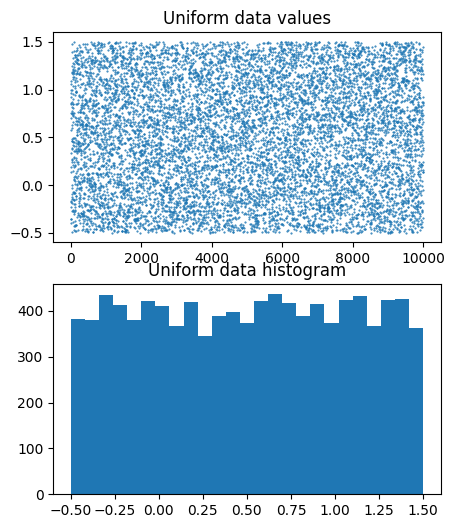

## Uniformly-distributed numbers

# parameters

stretch = 2 # not the variance

shift = .5

n = 10000

# create data

data = stretch*np.random.rand(n) + shift-stretch/2

# plot data

fig,ax = plt.subplots(2,1,figsize=(5,6))

ax[0].plot(data,'.',markersize=1)

ax[0].set_title('Uniform data values')

ax[1].hist(data,25)

ax[1].set_title('Uniform data histogram')

plt.show()

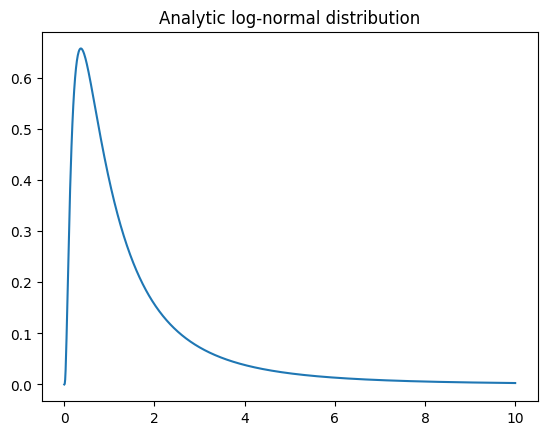

## log-normal distribution

N = 1001

x = np.linspace(0,10,N)

lognormdist = stats.lognorm.pdf(x,1)

plt.plot(x,lognormdist)

plt.title('Analytic log-normal distribution')

plt.show()

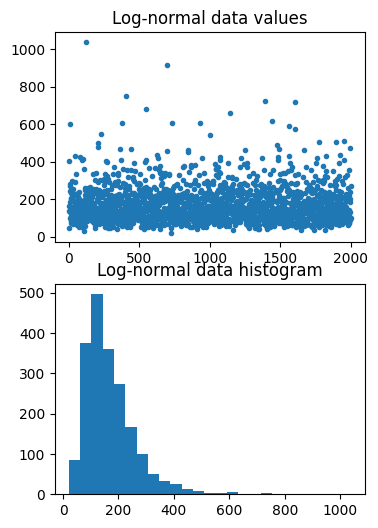

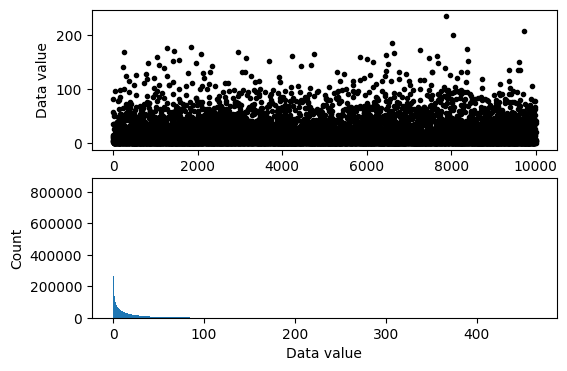

## empirical log-normal distribution

shift = 5 # equal to the mean?

stretch = .5 # equal to standard deviation?

n = 2000 # number of data points

# generate data

data = stretch*np.random.randn(n) + shift

data = np.exp( data )

# plot data

fig,ax = plt.subplots(2,1,figsize=(4,6))

ax[0].plot(data,'.')

ax[0].set_title('Log-normal data values')

ax[1].hist(data,25)

ax[1].set_title('Log-normal data histogram')

plt.show()

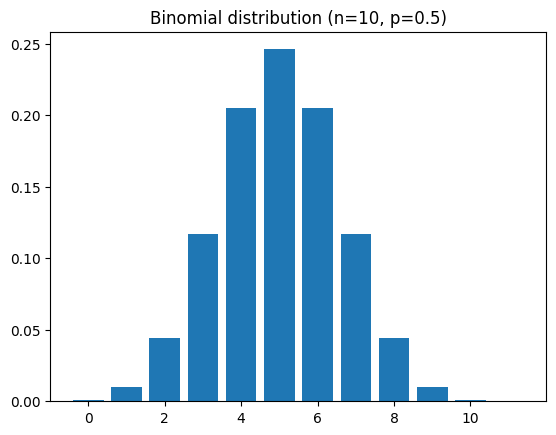

## binomial

# a binomial distribution is the probability of K heads in N coin tosses,

# given a probability of p heads (e.g., .5 is a fair coin).

n = 10 # number on coin tosses

p = .5 # probability of heads

x = range(n+2)

bindist = stats.binom.pmf(x,n,p)

plt.bar(x,bindist)

plt.title('Binomial distribution (n=%s, p=%g)'%(n,p))

plt.show()

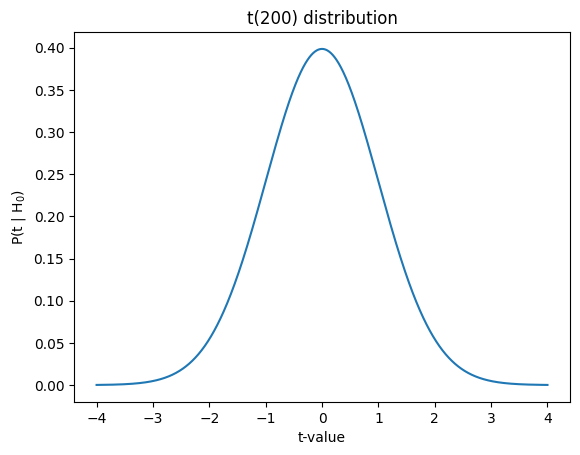

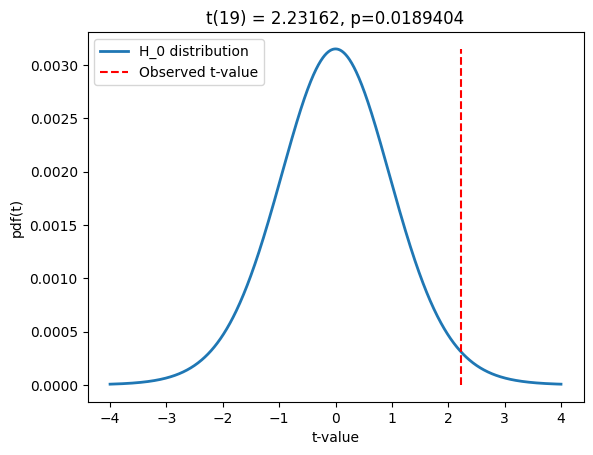

## t

x = np.linspace(-4,4,1001)

df = 200

t = stats.t.pdf(x,df)

plt.plot(x,t)

plt.xlabel('t-value')

plt.ylabel('P(t | H$_0$)')

plt.title('t(%g) distribution'%df)

plt.show()

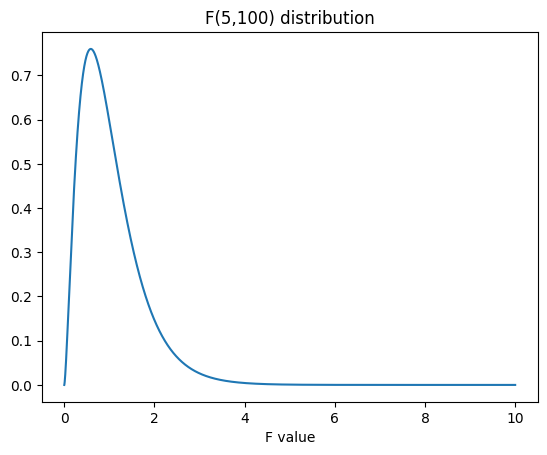

## F

# parameters

num_df = 5 # numerator degrees of freedom

den_df = 100 # denominator df

# values to evaluate

x = np.linspace(0,10,10001)

# the distribution

fdist = stats.f.pdf(x,num_df,den_df)

plt.plot(x,fdist)

plt.title(f'F({num_df},{den_df}) distribution')

plt.xlabel('F value')

plt.show()

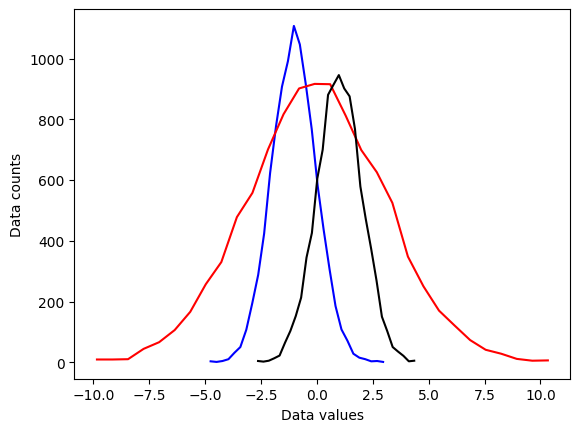

Computing dispersion

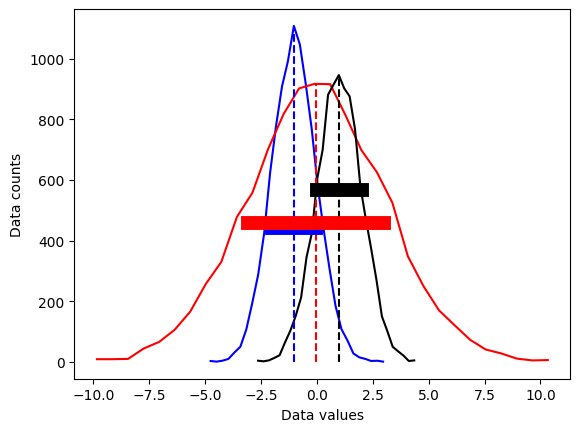

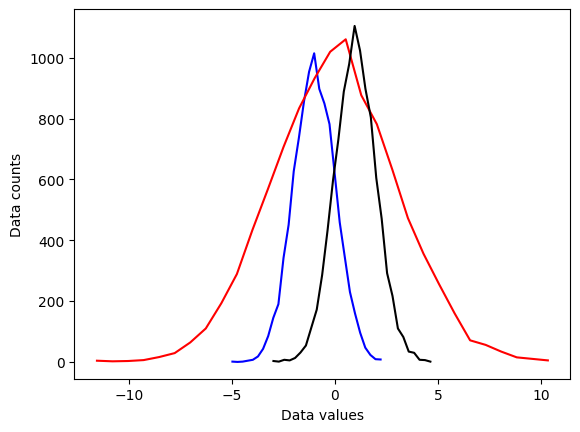

## create some data distributions

# the distributions

N = 10001 # number of data points

nbins = 30 # number of histogram bins

d1 = np.random.randn(N) - 1

d2 = 3*np.random.randn(N)

d3 = np.random.randn(N) + 1

# need their histograms

y1,x1 = np.histogram(d1,nbins)

x1 = (x1[1:]+x1[:-1])/2

y2,x2 = np.histogram(d2,nbins)

x2 = (x2[1:]+x2[:-1])/2

y3,x3 = np.histogram(d3,nbins)

x3 = (x3[1:]+x3[:-1])/2

# plot them

plt.plot(x1,y1,'b')

plt.plot(x2,y2,'r')

plt.plot(x3,y3,'k')

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

# side note:

meanval = 10.2

stdval = 7.5

numsamp = 123

# this

np.random.normal(meanval,stdval,numsamp)

# is equivalent to

np.random.randn(numsamp)*stdval + meanval

array([ -0.87388704, 23.65336127, 1.27988544, 17.04829163,

10.75457484, 9.68249118, 11.22715252, 19.7725035 ,

-1.01689652, 4.35014906, 2.42744711, 20.90617046,

13.00854419, -0.10259506, 6.82944939, -11.89267865,

21.16631839, 16.39280516, 9.09476693, 19.98243464,

22.61578168, -1.46609947, 2.16429265, 20.47355737,

16.84275579, 31.25852569, 24.34842632, 12.67746311,

13.28386249, 0.40678189, -1.74459528, 16.23562355,

14.31916935, 10.82066382, 7.5758167 , 19.18904038,

15.03423568, 18.77275799, -6.54852808, -2.15708239,

-3.49932265, 9.61055948, -5.41354871, 9.96327393,

25.30366261, 5.19479576, 4.59751711, 11.50987925,

13.85467453, 9.74117974, 17.305712 , 4.52833339,

7.1356735 , 13.42456617, 3.70541112, 1.33029122,

18.38764755, 20.38798885, 8.13071973, 13.0716251 ,

3.2078581 , 7.15034437, 10.84541165, 3.66708897,

-5.58821728, 18.03226744, 14.31356567, -2.63861608,

5.34760912, 10.05683736, -0.52072099, 16.51949691,

21.48789778, -2.93630354, 13.84026169, 5.90434504,

4.16912942, 10.96207774, 20.17271268, 22.72277056,

6.19739485, 5.07995896, 1.67212248, 20.69802595,

24.54634857, 9.46639918, 10.33854841, 6.42727657,

17.52544009, 18.01852162, 7.504182 , -8.6243624 ,

11.46639884, 5.8924707 , 23.40506416, 5.12431053,

12.76111211, 21.31636725, 12.37984906, -14.00257955,

22.03093215, 5.18363698, 17.26538053, 8.50443335,

8.4218123 , 17.74338666, 10.88031496, -0.99600587,

6.50961604, 12.18119378, -2.51193418, 4.76236751,

9.96541643, 12.12123062, 17.64317282, 14.50346946,

5.86475471, 7.28886638, 4.78059196, 15.49434827,

25.1052644 , 11.61240844, 2.67046243])

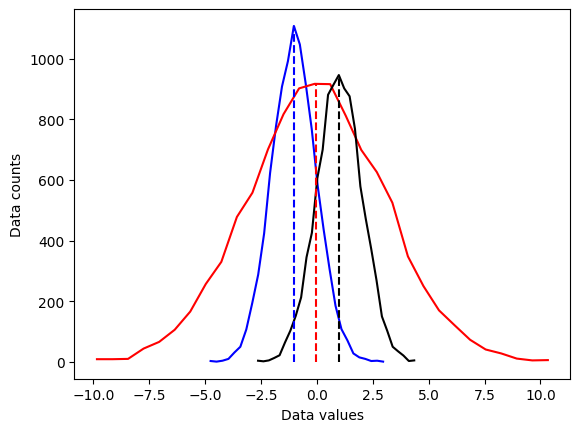

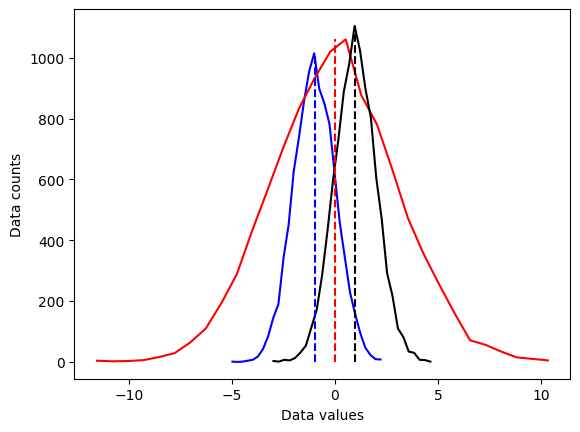

## overlay the mean

# compute the means

mean_d1 = sum(d1) / len(d1)

mean_d2 = np.mean(d2)

mean_d3 = np.mean(d3)

# plot them

plt.plot(x1,y1,'b', x2,y2,'r', x3,y3,'k')

plt.plot([mean_d1,mean_d1],[0,max(y1)],'b--')

plt.plot([mean_d2,mean_d2],[0,max(y2)],'r--')

plt.plot([mean_d3,mean_d3],[0,max(y3)],'k--')

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

## now for the standard deviation

# initialize

stds = np.zeros(3)

# compute standard deviations

stds[0] = np.std(d1,ddof=1)

stds[1] = np.std(d2,ddof=1)

stds[2] = np.std(d3,ddof=1)

# same plot as earlier

plt.plot(x1,y1,'b', x2,y2,'r', x3,y3,'k')

plt.plot([mean_d1,mean_d1],[0,max(y1)],'b--', [mean_d2,mean_d2],[0,max(y2)],'r--',[mean_d3,mean_d3],[0,max(y3)],'k--')

# now add stds

plt.plot([mean_d1-stds[0],mean_d1+stds[0]],[.4*max(y1),.4*max(y1)],'b',linewidth=10)

plt.plot([mean_d2-stds[1],mean_d2+stds[1]],[.5*max(y2),.5*max(y2)],'r',linewidth=10)

plt.plot([mean_d3-stds[2],mean_d3+stds[2]],[.6*max(y3),.6*max(y3)],'k',linewidth=10)

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

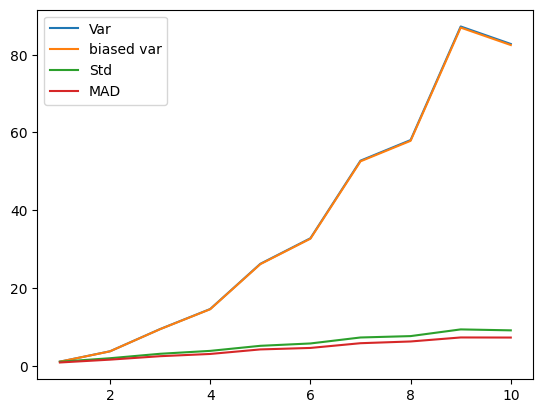

## different variance measures

variances = np.arange(1,11)

N = 300

varmeasures = np.zeros((4,len(variances)))

for i in range(len(variances)):

# create data and mean-center

data = np.random.randn(N) * variances[i]

datacent = data - np.mean(data)

# variance

varmeasures[0,i] = sum(datacent**2) / (N-1)

# "biased" variance

varmeasures[1,i] = sum(datacent**2) / N

# standard deviation

varmeasures[2,i] = np.sqrt( sum(datacent**2) / (N-1) )

# MAD (mean absolute difference)

varmeasures[3,i] = sum(abs(datacent)) / (N-1)

# show them!

plt.plot(variances,varmeasures.T)

plt.legend(('Var','biased var','Std','MAD'))

plt.show()

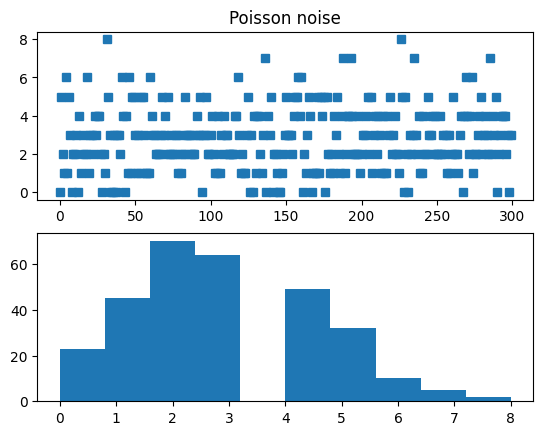

## Fano factor and coefficient of variation (CV)

# need positive-valued data (why?)

data = np.random.poisson(3,300) # "Poisson noise"

fig,ax = plt.subplots(2,1)

ax[0].plot(data,'s')

ax[0].set_title('Poisson noise')

ax[1].hist(data)

plt.show()

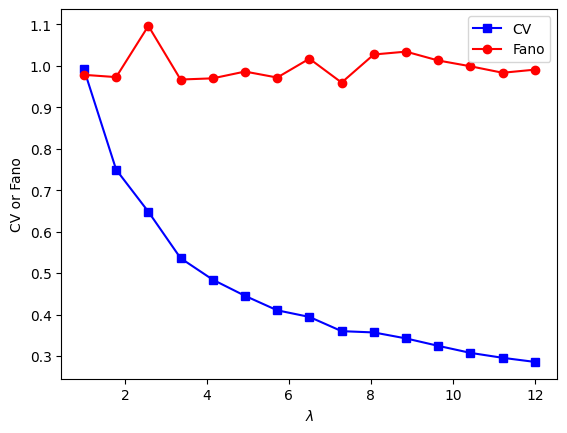

## compute fano factor and CV for a range of lambda parameters

# list of parameters

lambdas = np.linspace(1,12,15)

# initialize output vectors

fano = np.zeros(len(lambdas))

cv = np.zeros(len(lambdas))

for li in range(len(lambdas)):

# generate new data

data = np.random.poisson(lambdas[li],1000)

# compute the metrics

cv[li] = np.std(data) / np.mean(data) # need ddof=1 here?

fano[li] = np.var(data) / np.mean(data)

# and plot

plt.plot(lambdas,cv,'bs-')

plt.plot(lambdas,fano,'ro-')

plt.legend(('CV','Fano'))

plt.xlabel('$\lambda$')

plt.ylabel('CV or Fano')

plt.show()

Computing central tendency

## create some data distributions

# the distributions

N = 10001 # number of data points

nbins = 30 # number of histogram bins

d1 = np.random.randn(N) - 1

d2 = 3*np.random.randn(N)

d3 = np.random.randn(N) + 1

# need their histograms

y1,x1 = np.histogram(d1,nbins)

x1 = (x1[1:]+x1[:-1])/2

y2,x2 = np.histogram(d2,nbins)

x2 = (x2[1:]+x2[:-1])/2

y3,x3 = np.histogram(d3,nbins)

x3 = (x3[1:]+x3[:-1])/2

# plot them

plt.plot(x1,y1,'b')

plt.plot(x2,y2,'r')

plt.plot(x3,y3,'k')

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

## overlay the mean

# compute the means

mean_d1 = sum(d1) / len(d1)

mean_d2 = np.mean(d2)

mean_d3 = np.mean(d3)

# plot them

plt.plot(x1,y1,'b', x2,y2,'r', x3,y3,'k')

plt.plot([mean_d1,mean_d1],[0,max(y1)],'b--')

plt.plot([mean_d2,mean_d2],[0,max(y2)],'r--')

plt.plot([mean_d3,mean_d3],[0,max(y3)],'k--')

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

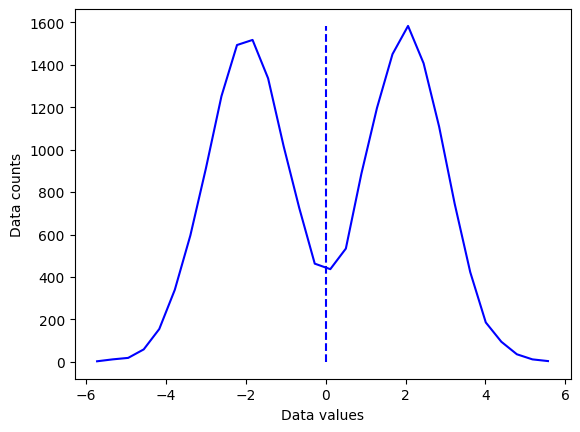

## "failure" of the mean

# new dataset of distribution combinations

d4 = np.hstack( (np.random.randn(N)-2,np.random.randn(N)+2) )

# and its histogram

[y4,x4] = np.histogram(d4,nbins)

x4 = (x4[:-1]+x4[1:])/2

# and its mean

mean_d4 = np.mean(d4)

plt.plot(x4,y4,'b')

plt.plot([mean_d4,mean_d4],[0,max(y4)],'b--')

plt.xlabel('Data values')

plt.ylabel('Data counts')

plt.show()

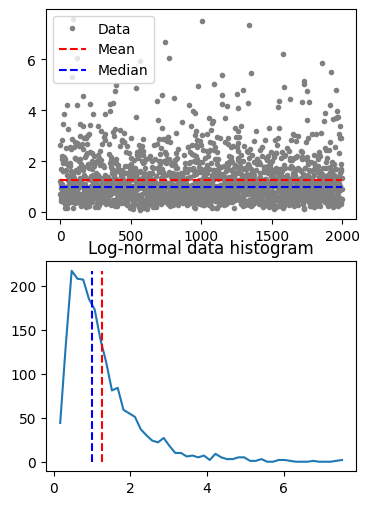

## median

# create a log-normal distribution

shift = 0

stretch = .7

n = 2000

nbins = 50

# generate data

data = stretch*np.random.randn(n) + shift

data = np.exp( data )

# and its histogram

y,x = np.histogram(data,nbins)

x = (x[:-1]+x[1:])/2

# compute mean and median

datamean = np.mean(data)

datamedian = np.median(data)

# plot data

fig,ax = plt.subplots(2,1,figsize=(4,6))

ax[0].plot(data,'.',color=[.5,.5,.5],label='Data')

ax[0].plot([1,n],[datamean,datamean],'r--',label='Mean')

ax[0].plot([1,n],[datamedian,datamedian],'b--',label='Median')

ax[0].legend()

ax[1].plot(x,y)

ax[1].plot([datamean,datamean],[0,max(y)],'r--')

ax[1].plot([datamedian,datamedian],[0,max(y)],'b--')

ax[1].set_title('Log-normal data histogram')

plt.show()

## mode

data = np.round(np.random.randn(10))

uniq_data = np.unique(data)

for i in range(len(uniq_data)):

print(f'{uniq_data[i]} appears {sum(data==uniq_data[i])} times.')

print(' ')

print('The modal value is %g'%stats.mode(data)[0][0])

-2.0 appears 2 times.

0.0 appears 4 times.

1.0 appears 3 times.

3.0 appears 1 times.

The modal value is 0

/var/folders/93/6sy7vf8142969b_8w873sxcc0000gn/T/ipykernel_19313/3832225992.py:10: FutureWarning: Unlike other reduction functions (e.g. `skew`, `kurtosis`), the default behavior of `mode` typically preserves the axis it acts along. In SciPy 1.11.0, this behavior will change: the default value of `keepdims` will become False, the `axis` over which the statistic is taken will be eliminated, and the value None will no longer be accepted. Set `keepdims` to True or False to avoid this warning.

print('The modal value is %g'%stats.mode(data)[0][0])

Data normalizations and outliers

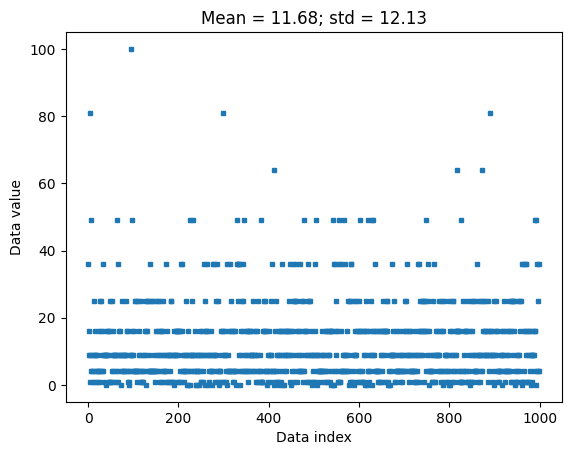

Z-score

## create data

data = np.random.poisson(3,1000)**2

## compute the mean and std

datamean = np.mean(data)

datastd = np.std(data,ddof=1)

# the previous two lines are equivalent to the following two lines

#datamean = data.mean()

#datastd = data.std(ddof=1)

plt.plot(data,'s',markersize=3)

plt.xlabel('Data index')

plt.ylabel('Data value')

plt.title(f'Mean = {np.round(datamean,2)}; std = {np.round(datastd,2)}')

plt.show()

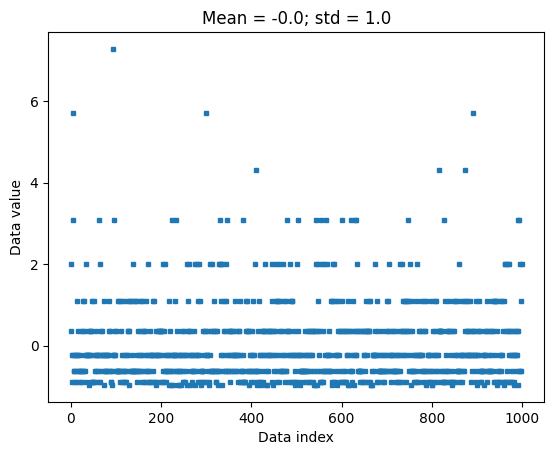

## now for z-scoring

# z-score is data minus mean divided by stdev

dataz = (data-datamean) / datastd

# can also use Python function

### NOTE the ddof=1 in the zscore function, to match std() below. That's incorrect in the video :(

dataz = stats.zscore(data,ddof=1)

# compute the mean and std

dataZmean = np.mean(dataz)

dataZstd = np.std(dataz,ddof=1)

plt.plot(dataz,'s',markersize=3)

plt.xlabel('Data index')

plt.ylabel('Data value')

plt.title(f'Mean = {np.round(dataZmean,2)}; std = {np.round(dataZstd,2)}')

plt.show()

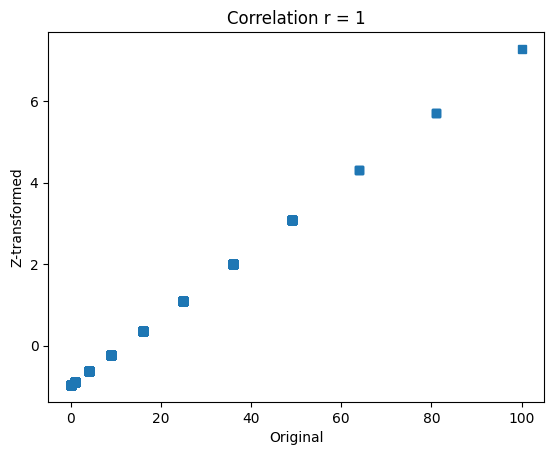

## show that the relative values are preserved

plt.plot(data,dataz,'s')

plt.xlabel('Original')

plt.ylabel('Z-transformed')

plt.title('Correlation r = %g'%np.corrcoef(data,dataz)[0,0])

plt.show()

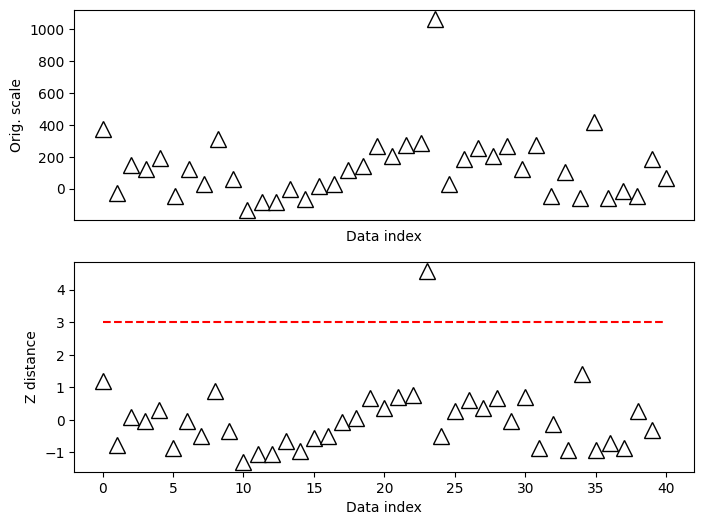

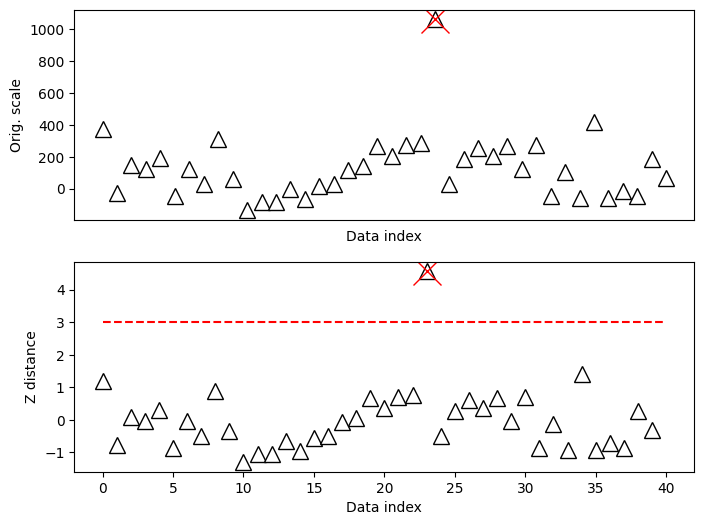

Z-score for outlier removal

import numpy as np

import matplotlib.pyplot as plt

from statsmodels import robust

import scipy.stats as stats

## create some data

N = 40

data = np.random.randn(N)

data[data<-1] = data[data<-1]+2

data[data>2] = data[data>2]**2; # try to force a few outliers

data = data*200 + 50 # change the scale for comparison with z

# convert to z

dataZ = (data-np.mean(data)) / np.std(data)

#### specify the z-score threshold

zscorethresh = 3

# plot the data

fig,ax = plt.subplots(2,1,figsize=(8,6))

ax[0].plot(data,'k^',markerfacecolor='w',markersize=12)

ax[0].set_xticks([])

ax[0].set_xlabel('Data index')

ax[0].set_ylabel('Orig. scale')

# then plot the zscores

ax[1].plot(dataZ,'k^',markerfacecolor='w',markersize=12)

ax[1].plot([0,N],[zscorethresh,zscorethresh],'r--')

ax[1].set_xlabel('Data index')

ax[1].set_ylabel('Z distance')

plt.show()

## identify outliers

# find 'em!

outliers = np.where(abs(dataZ)>zscorethresh)[0]

# and cross those out

ax[0].plot(outliers,data[outliers],'x',color='r',markersize=20)

ax[1].plot(outliers,dataZ[outliers],'x',color='r',markersize=20)

fig

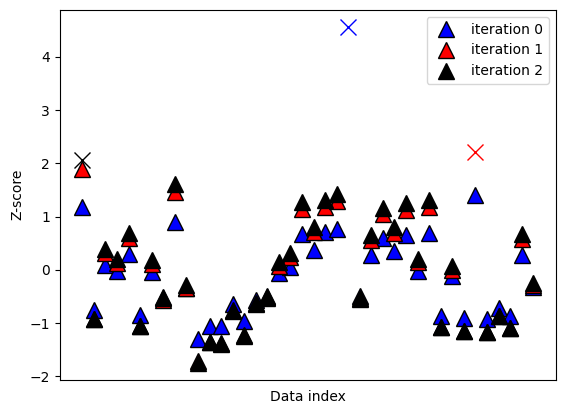

## iterative method

# pick a lenient threshold just for illustration

zscorethresh = 2

dataZ = (data-np.mean(data)) / np.std(data)

colorz = 'brkm'

numiters = 0 # iteration counter

while True:

# convert to z

datamean = np.nanmean(dataZ)

datastd = np.nanstd(dataZ)

dataZ = (dataZ-datamean) / datastd

# find data values to remove

toremove = dataZ>zscorethresh

# break out of while loop if no points to remove

if sum(toregggmove)==0:

breakg

else:

# otherwise, mark the outliers in the plot

plt.plot(np.where(toremove)[0],dataZ[toremove],'%sx'%colorz[numiters],markersize=12)

dataZ[toremove] = np.nan

# replot

plt.plot(dataZ,'k^',markersize=12,markerfacecolor=colorz[numiters],label='iteration %g'%numiters)

numiters = numiters + 1

plt.xticks([])

plt.ylabel('Z-score')

plt.xlabel('Data index')

plt.legend()

plt.show()

#### the data points to be removed

removeFromOriginal = np.where(np.isnan(dataZ))[0]

print(removeFromOriginal)

[ 0 23 34]

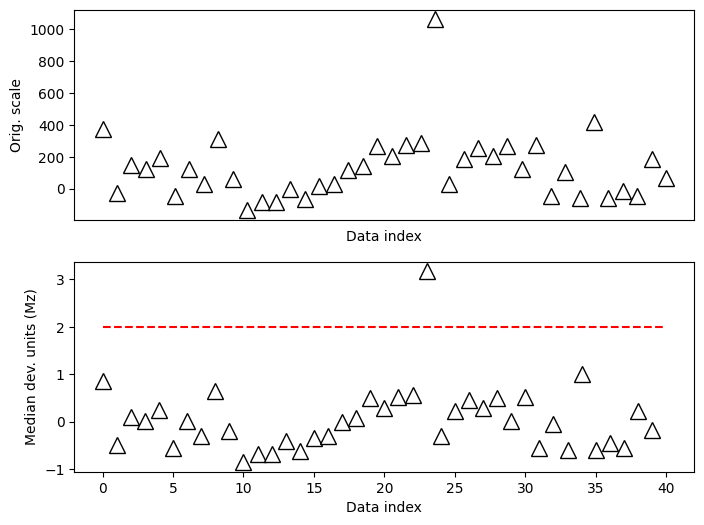

## modified Z for non-normal distributions

# compute modified z

dataMed = np.median(data)

dataMAD = robust.mad(data)

dataMz = stats.norm.ppf(.75)*(data-dataMed) / dataMAD

# plot the data

fig,ax = plt.subplots(2,1,figsize=(8,6))

ax[0].plot(data,'k^',markerfacecolor='w',markersize=12)

ax[0].set_xticks([])

ax[0].set_xlabel('Data index')

ax[0].set_ylabel('Orig. scale')

# then plot the zscores

ax[1].plot(dataMz,'k^',markerfacecolor='w',markersize=12)

ax[1].plot([0,N],[zscorethresh,zscorethresh],'r--')

ax[1].set_xlabel('Data index')

ax[1].set_ylabel('Median dev. units (Mz)')

plt.show()

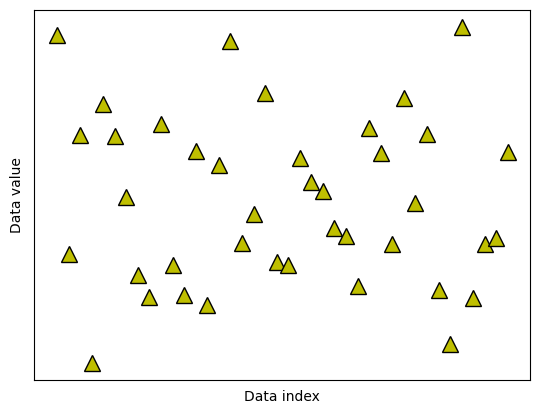

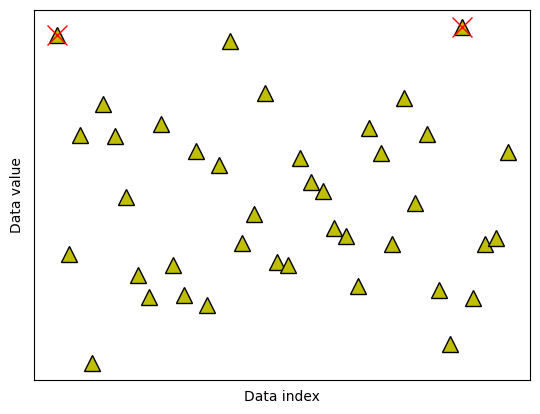

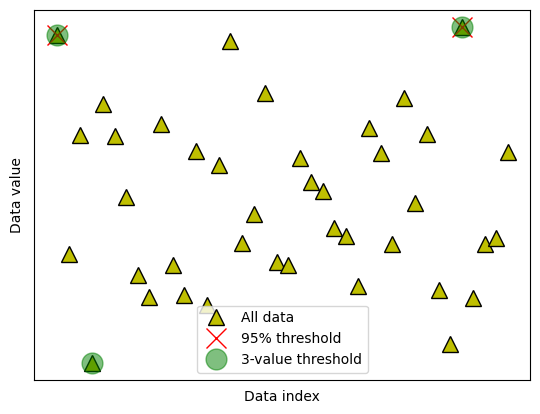

Data trimming to remove outliers

## create some data

N = 40

data = np.random.randn(N)

data[data<-2] = -data[data<-2]**2

data[data>2] = data[data>2]**2

# also need the mean-centered data

dataMC = data - np.mean(data)

# and plot them (not it ;) )

fig,ax = plt.subplots(1,1)

ax.plot(data,'k^',markerfacecolor='y',markersize=12)

ax.set_xticks([])

ax.set_yticks([])

ax.set_xlabel('Data index')

ax.set_ylabel('Data value')

plt.show()

## option 1: remove k% of the data

# percent of "extreme" data values to remove

trimPct = 5 # in percent

# identify the cut-off (note the abs() )

datacutoff = np.percentile(abs(dataMC),100-trimPct)

# find the exceedance data values

data2cut = np.where( abs(dataMC)>datacutoff )[0]

# and mark those off

ax.plot(data2cut,data[data2cut],'rx',markersize=15)

fig

## option 2: remove k most extreme values

# number of "extreme" data values to remove

k2remove = 3 # in number

# find the exceedance data values

datasortIdx = np.argsort(abs(dataMC),axis=0)[::-1]

data2cut = np.squeeze(datasortIdx[:k2remove])

# and mark those off

ax.plot(data2cut,data[data2cut],'go',markersize=15,alpha=.5)

# finally, add a legend

ax.legend(('All data','%g%% threshold'%(100-trimPct),'%g-value threshold'%k2remove))

fig

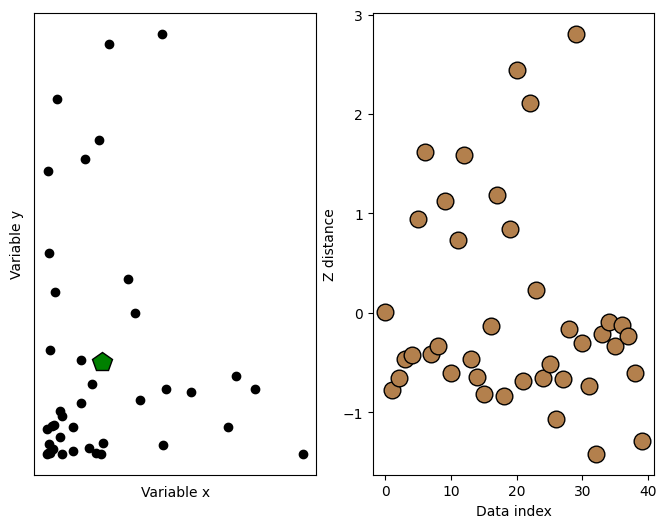

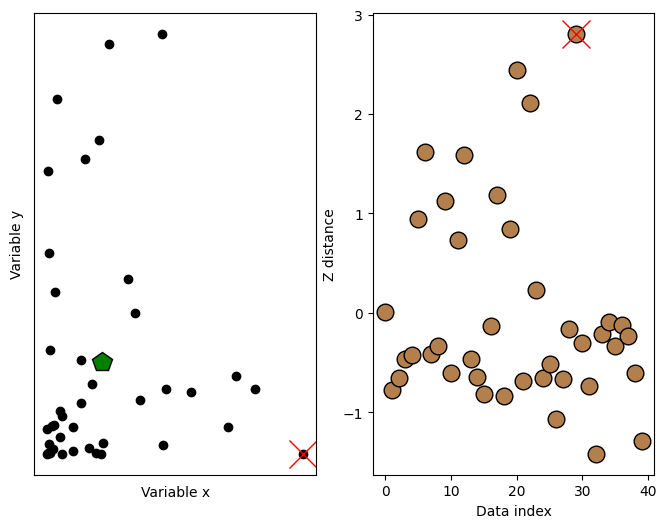

Euclidean distance for outlier removal

## create some data

N = 40

# two-dimensional data

d1 = np.exp(-abs(np.random.randn(N)*3))

d2 = np.exp(-abs(np.random.randn(N)*5))

datamean = [ np.mean(d1), np.mean(d2) ]

# compute distance of each point to the mean

ds = np.zeros(N)

for i in range(N):

ds[i] = np.sqrt( (d1[i]-datamean[0])**2 + (d2[i]-datamean[1])**2 )

# convert to z (don't need the original data)

ds = (ds-np.mean(ds)) / np.std(ds)

# plot the data

fig,ax = plt.subplots(1,2,figsize=(8,6))

ax[0].plot(d1,d2,'ko',markerfacecolor='k')

ax[0].set_xticks([])

ax[0].set_yticks([])

ax[0].set_xlabel('Variable x')

ax[0].set_ylabel('Variable y')

# plot the multivariate mean

ax[0].plot(datamean[0],datamean[1],'kp',markerfacecolor='g',markersize=15)

# then plot those distances

ax[1].plot(ds,'ko',markerfacecolor=[.7, .5, .3],markersize=12)

ax[1].set_xlabel('Data index')

ax[1].set_ylabel('Z distance')

Text(0, 0.5, 'Z distance')

## now for the thresholding

# threshold in standard deviation units

distanceThresh = 2.5

# find the offending points

oidx = np.where(ds>distanceThresh)[0]

print(oidx)

# and cross those out

ax[1].plot(oidx,ds[oidx],'x',color='r',markersize=20)

ax[0].plot(d1[oidx],d2[oidx],'x',color='r',markersize=20)

fig

[29]

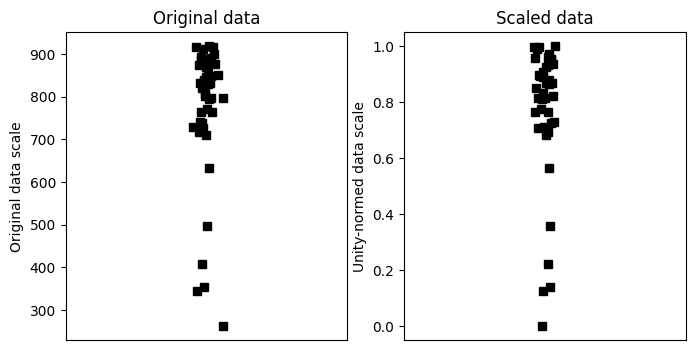

Min-max scaling

## create some data

N = 42

data = np.log(np.random.rand(N))*234 + 934

# get min and max

dataMin = min(data)

dataMax = max(data)

# now min-max scale

dataS = (data-dataMin) / (dataMax-dataMin)

# now plot

fig,ax = plt.subplots(1,2,figsize=(8,4))

ax[0].plot(1+np.random.randn(N)/20,data,'ks')

ax[0].set_xlim([0,2])

ax[0].set_xticks([])

ax[0].set_ylabel('Original data scale')

ax[0].set_title('Original data')

ax[1].plot(1+np.random.randn(N)/20,dataS,'ks')

ax[1].set_xlim([0,2])

ax[1].set_xticks([])

ax[1].set_ylabel('Unity-normed data scale')

ax[1].set_title('Scaled data')

plt.show()

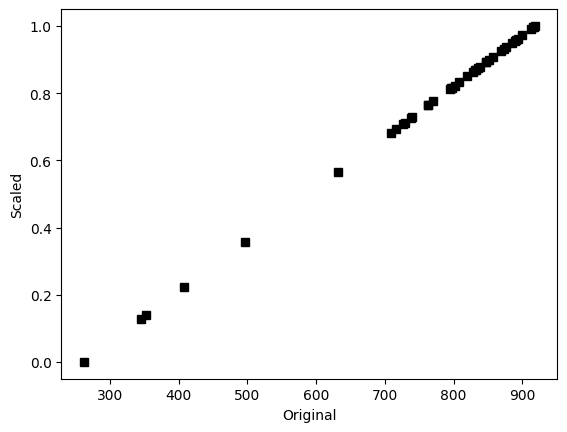

## show that scaling doesn't affect the relative values

plt.plot(data,dataS,'ks')

plt.xlabel('Original')

plt.ylabel('Scaled')

plt.show()

## any abitrary data range

# step 1 is to [0,1] normalize as above

# step 2:

newMin = 4

newMax = 8.7

dataSS = dataS*(newMax-newMin) + newMin

# test it!

print([min(dataSS), max(dataSS)])

[4.0, 8.7]

Probability theory

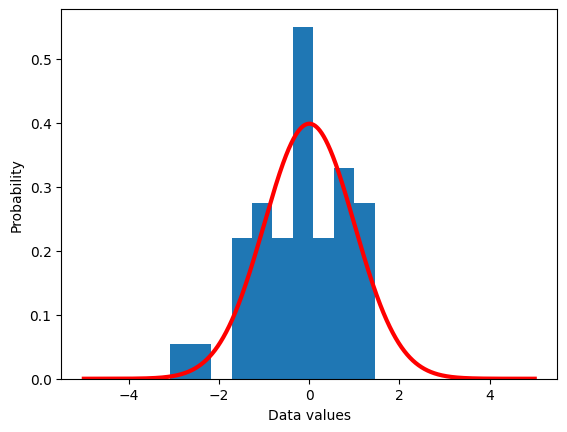

Sampling variability

## a theoretical normal distribution

x = np.linspace(-5,5,10101)

theoNormDist = stats.norm.pdf(x)

# (normalize to pdf)

# theoNormDist = theoNormDist*np.mean(np.diff(x))

# now for our experiment

numSamples = 40

# initialize

sampledata = np.zeros(numSamples)

# run the experiment!

for expi in range(numSamples):

sampledata[expi] = np.random.randn()

# show the results

plt.hist(sampledata,density=True)

plt.plot(x,theoNormDist,'r',linewidth=3)

plt.xlabel('Data values')

plt.ylabel('Probability')

plt.show()

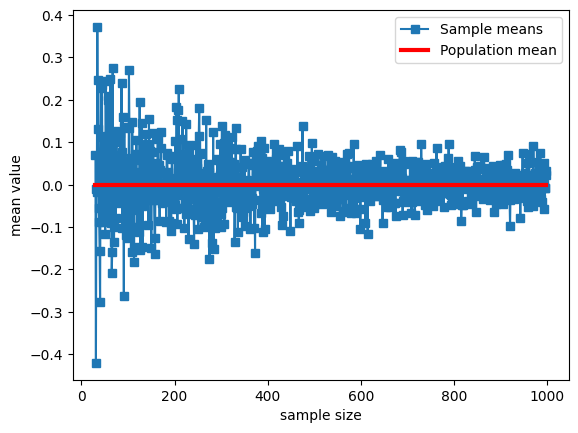

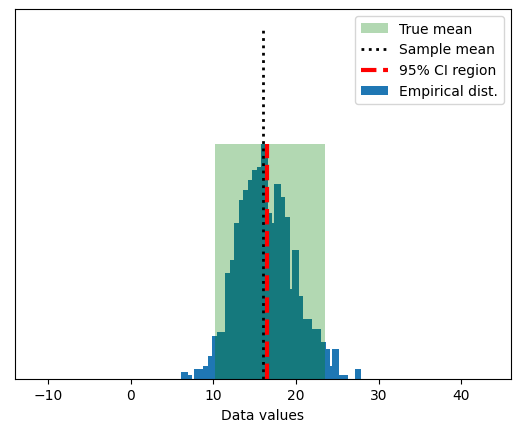

## show the mean of samples of a known distribution

# generate population data with known mean

populationN = 1000000

population = np.random.randn(populationN)

population = population - np.mean(population) # demean

# now we draw a random sample from that population

samplesize = 30

# the random indices to select from the population

sampleidx = np.random.randint(0,populationN,samplesize)

samplemean = np.mean(population[ sampleidx ])

### how does the sample mean compare to the population mean?

print(samplemean)

-0.015928841030912758

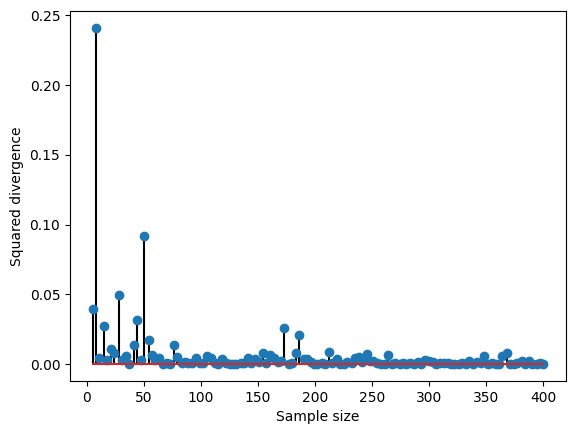

## repeat for different sample sizes

samplesizes = np.arange(30,1000)

samplemeans = np.zeros(len(samplesizes))

for sampi in range(len(samplesizes)):

# nearly the same code as above

sampleidx = np.random.randint(0,populationN,samplesizes[sampi])

samplemeans[sampi] = np.mean(population[ sampleidx ])

# show the results!

plt.plot(samplesizes,samplemeans,'s-')

plt.plot(samplesizes[[0,-1]],[np.mean(population),np.mean(population)],'r',linewidth=3)

plt.xlabel('sample size')

plt.ylabel('mean value')

plt.legend(('Sample means','Population mean'))

plt.show()

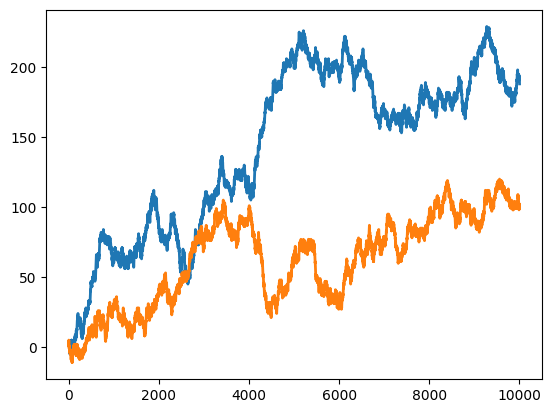

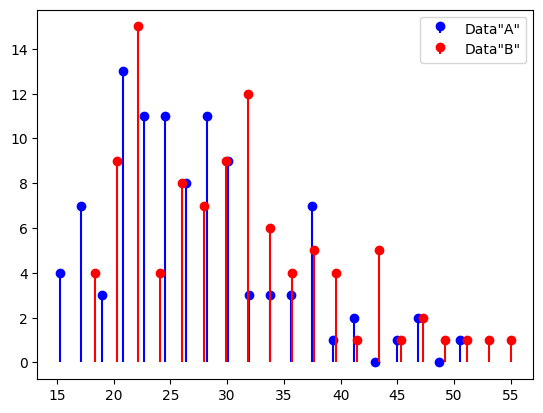

Compute probability mass functions

## compute empirical probability function

# continous signal (technically discrete!)

N = 10004

datats1 = np.cumsum(np.sign(np.random.randn(N)))

datats2 = np.cumsum(np.sign(np.random.randn(N)))

# let's see what they look like

plt.plot(np.arange(N),datats1,linewidth=2)

plt.plot(np.arange(N),datats2,linewidth=2)

plt.show()

# discretize using histograms

nbins = 50

y,x = np.histogram(datats1,nbins)

x1 = (x[1:]+x[:-1])/2

y1 = y/sum(y)

y,x = np.histogram(datats2,nbins)

x2 = (x[1:]+x[:-1])/2

y2 = y/sum(y)

plt.plot(x1,y1, x2,y2,linewidth=3)

plt.legend(('ts1','ts2'))

plt.xlabel('Data value')

plt.ylabel('Probability')

plt.show()

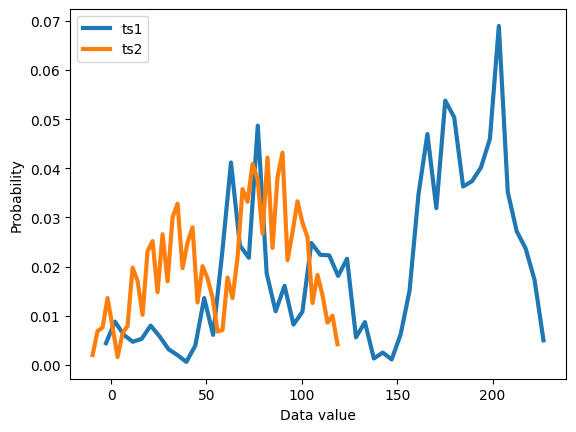

The law of large numbers

## example with rolling a die

# die probabilities (weighted)

f1 = 2/8

f2 = 2/8

f3 = 1/8

f4 = 1/8

f5 = 1/8

f6 = 1/8

# confirm sum to 1

print(f1+f2+f3+f4+f5+f6)

# expected value

expval = 1*f1 + 2*f2 + 3*f3 + 4*f4 + 5*f5 + 6*f6

# generate "population"

population = [ 1, 1, 2, 2, 3, 4, 5, 6 ]

for i in range(20):

population = np.hstack((population,population))

nPop = len(population)

# draw sample of 8 rolls

sample = np.random.choice(population,8)

1.0

## experiment: draw larger and larger samples

k = 5000 # maximum number of samples

sampleAve = np.zeros(k)

for i in range(k):

idx = np.floor(np.random.rand(i+1)*nPop)

sampleAve[i] = np.mean( population[idx.astype(int)] )

plt.plot(sampleAve,'k')

plt.plot([1,k],[expval,expval],'r',linewidth=4)

plt.xlabel('Number of samples')

plt.ylabel('Value')

plt.ylim([expval-1, expval+1])

plt.legend(('Sample average','expected value'))

# mean of samples converges to population estimate quickly:

print( np.mean(sampleAve) )

print( np.mean(sampleAve[:9]) )

2.9998251177888515

2.8296737213403884

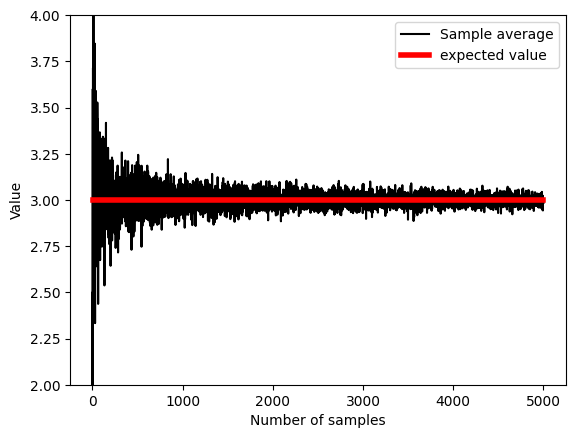

## Another example from a previous lecture (sampleVariability) (slightly adapted)

# generate population data with known mean

populationN = 1000000

population = np.random.randn(populationN)

population = population - np.mean(population) # demean

# get means of samples

samplesize = 30

numberOfExps = 500

samplemeans = np.zeros(numberOfExps)

for expi in range(numberOfExps):

# get a sample and compute its mean

sampleidx = np.random.randint(0,populationN,samplesize)

samplemeans[expi] = np.mean(population[ sampleidx ])

# show the results!

fig,ax = plt.subplots(2,1,figsize=(4,6))

ax[0].plot(samplemeans,'s-')

ax[0].plot([0,numberOfExps],[np.mean(population),np.mean(population)],'r',linewidth=3)

ax[0].set_xlabel('Experiment number')

ax[0].set_ylabel('mean value')

ax[0].legend(('Sample means','Population mean'))

ax[1].plot(np.cumsum(samplemeans) / np.arange(1,numberOfExps+1),'s-')

ax[1].plot([0,numberOfExps],[np.mean(population),np.mean(population)],'r',linewidth=3)

ax[1].set_xlabel('Experiment number')

ax[1].set_ylabel('mean value')

ax[1].legend(('Sample means','Population mean'))

plt.show()

## some foreshadowing...

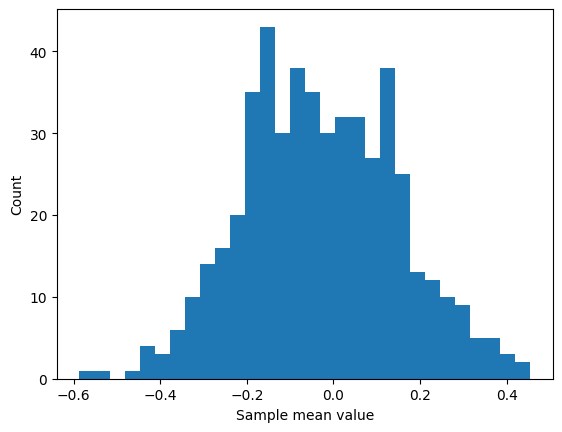

plt.hist(samplemeans,30)

plt.xlabel('Sample mean value')

plt.ylabel('Count')

plt.show()

Conditional probability

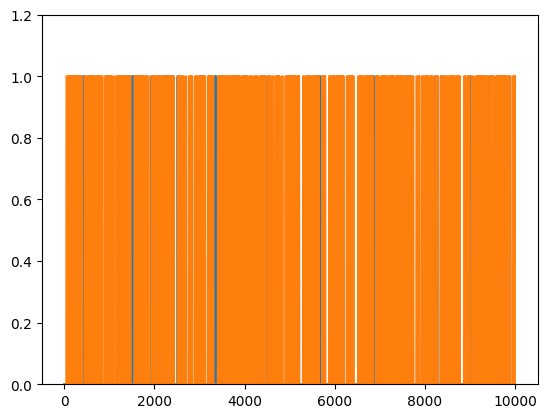

## generate two long-spike time series

N = 10000

spikeDur = 10 # a.u. but must be an even number

spikeNumA = .01 # in proportion of total number of points

spikeNumB = .05 # in proportion of total number of points

# initialize to zeros

spike_tsA = np.zeros(N)

spike_tsB = np.zeros(N)

### populate time series A

spiketimesA = np.random.randint(0,N,int(N*spikeNumA))

# flesh out spikes (loop per spike)

for spikei in range(len(spiketimesA)):

# find boundaries

bnd_pre = int( max(0,spiketimesA[spikei]-spikeDur/2) )

bnd_pst = int( min(N,spiketimesA[spikei]+spikeDur/2) )

# fill in with ones

spike_tsA[bnd_pre:bnd_pst] = 1

# ### repeat for time series 2

spiketimesB = np.random.randint(0,N,int(N*spikeNumB))

# spiketimesB[:len(spiketimesA)] = spiketimesA # induce strong conditional probability

# flesh out spikes (loop per spike)

for spikei in range(len(spiketimesB)):

# find boundaries

bnd_pre = int( max(0,spiketimesB[spikei]-spikeDur/2) )

bnd_pst = int( min(N,spiketimesB[spikei]+spikeDur/2) )

# fill in with ones

spike_tsB[bnd_pre:bnd_pst] = 1

## let's see what they look like

plt.plot(range(N),spike_tsA, range(N),spike_tsB)

plt.ylim([0,1.2])

# plt.xlim([2000,2500])

plt.show()

## compute their probabilities and intersection

# probabilities

probA = sum(spike_tsA==1) / N

probB = np.mean(spike_tsB)

# joint probability

probAB = np.mean(spike_tsA+spike_tsB==2)

print(probA,probB,probAB)

0.0956 0.3871 0.0432

## compute the conditional probabilities

# p(A|B)

pAgivenB = probAB/probB

# p(B|A)

pBgivenA = probAB/probA

# print a little report

print('P(A) = %g'%probA)

print('P(A|B) = %g'%pAgivenB)

print('P(B) = %g'%probB)

print('P(B|A) = %g'%pBgivenA)

P(A) = 0.0956

P(A|B) = 0.111599

P(B) = 0.3871

P(B|A) = 0.451883

Compute probabilities

## the basic formula

# counts of the different events

c = np.array([ 1, 2, 4, 3 ])

# convert to probability (%)

prob = 100*c / np.sum(c)

print(prob)

[10. 20. 40. 30.]

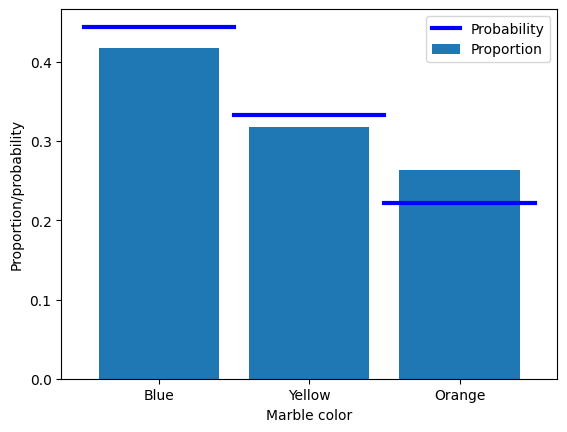

## the example of drawing marbles from a jar

# colored marble counts

blue = 40

yellow = 30

orange = 20

totalMarbs = blue + yellow + orange

# put them all in a jar

jar = np.hstack((1*np.ones(blue),2*np.ones(yellow),3*np.ones(orange)))

# now we draw 500 marbles (with replacement)

numDraws = 500

drawColors = np.zeros(numDraws)

for drawi in range(numDraws):

# generate a random integer to draw

randmarble = int(np.random.rand()*len(jar))

# store the color of that marble

drawColors[drawi] = jar[randmarble]

# now we need to know the proportion of colors drawn

propBlue = sum(drawColors==1) / numDraws

propYell = sum(drawColors==2) / numDraws

propOran = sum(drawColors==3) / numDraws

# plot those against the theoretical probability

plt.bar([1,2,3],[ propBlue, propYell, propOran ],label='Proportion')

plt.plot([0.5, 1.5],[blue/totalMarbs, blue/totalMarbs],'b',linewidth=3,label='Probability')

plt.plot([1.5, 2.5],[yellow/totalMarbs,yellow/totalMarbs],'b',linewidth=3)

plt.plot([2.5, 3.5],[orange/totalMarbs,orange/totalMarbs],'b',linewidth=3)

plt.xticks([1,2,3],labels=('Blue','Yellow','Orange'))

plt.xlabel('Marble color')

plt.ylabel('Proportion/probability')

plt.legend()

plt.show()

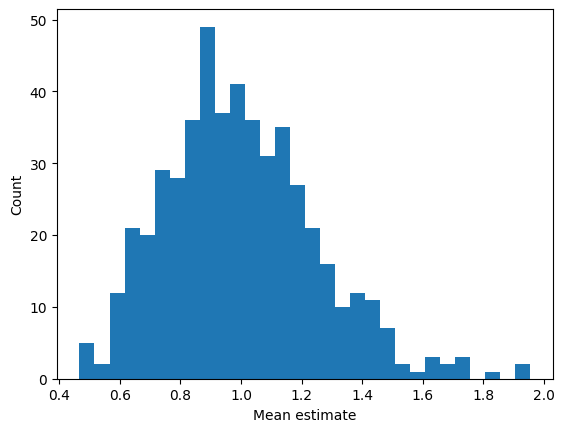

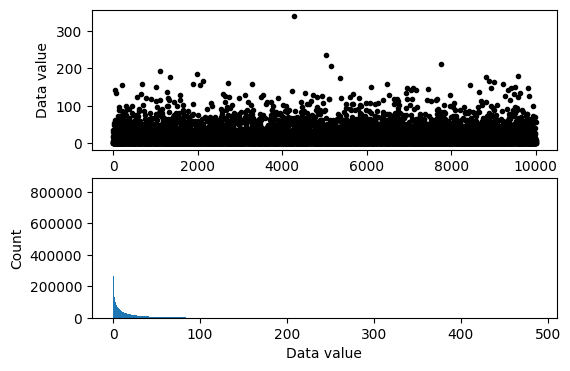

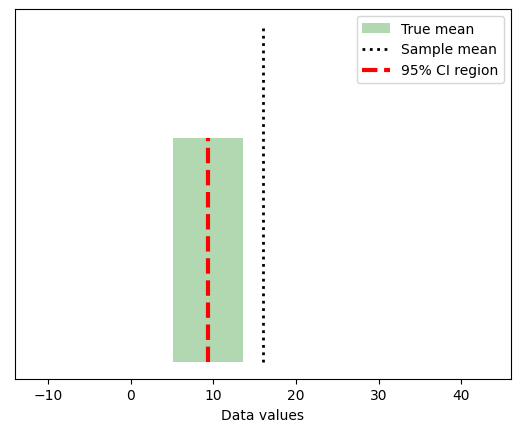

Central limit theorem in action

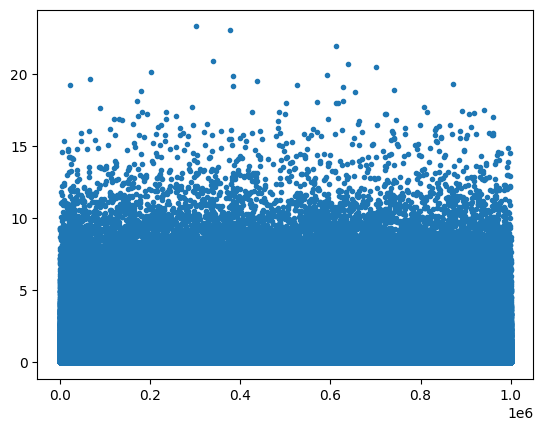

## create data from a power-law distribution

# data

N = 1000000

data = np.random.randn(N)**2

# alternative data

# data = np.sin(np.linspace(0,10*np.pi,N))

# show the distribution

plt.plot(data,'.')

plt.show()

plt.hist(data,40)

plt.show()

## repeated samples of the mean

samplesize = 30

numberOfExps = 500

samplemeans = np.zeros(numberOfExps)

for expi in range(numberOfExps):

# get a sample and compute its mean

sampleidx = np.random.randint(0,N,samplesize)

samplemeans[expi] = np.mean(data[ sampleidx ])

# and show its distribution

plt.hist(samplemeans,30)

plt.xlabel('Mean estimate')

plt.ylabel('Count')

plt.show()

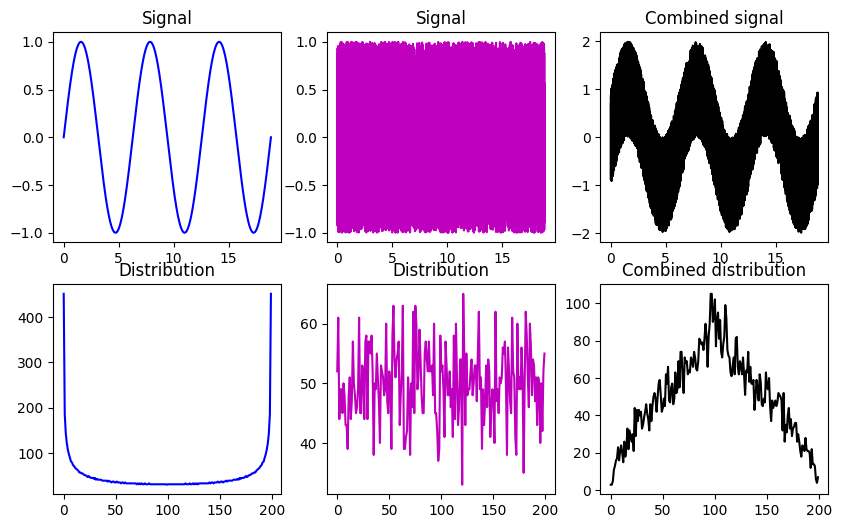

## linear mixtures

# create two datasets with non-Gaussian distributions

x = np.linspace(0,6*np.pi,10001)

s = np.sin(x)

u = 2*np.random.rand(len(x))-1

fig,ax = plt.subplots(2,3,figsize=(10,6))

ax[0,0].plot(x,s,'b')

ax[0,0].set_title('Signal')

y,xx = np.histogram(s,200)

ax[1,0].plot(y,'b')

ax[1,0].set_title('Distribution')

ax[0,1].plot(x,u,'m')

ax[0,1].set_title('Signal')

y,xx = np.histogram(u,200)

ax[1,1].plot(y,'m')

ax[1,1].set_title('Distribution')

ax[0,2].plot(x,s+u,'k')

ax[0,2].set_title('Combined signal')

y,xx = np.histogram(s+u,200)

ax[1,2].plot(y,'k')

ax[1,2].set_title('Combined distribution')

plt.show()

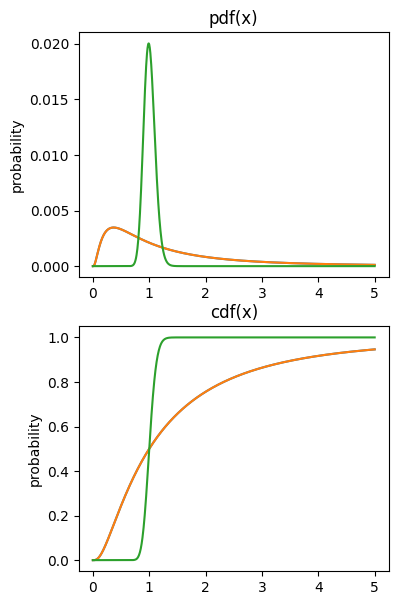

SECTION: Probability theory

cdf’s and pdf’s

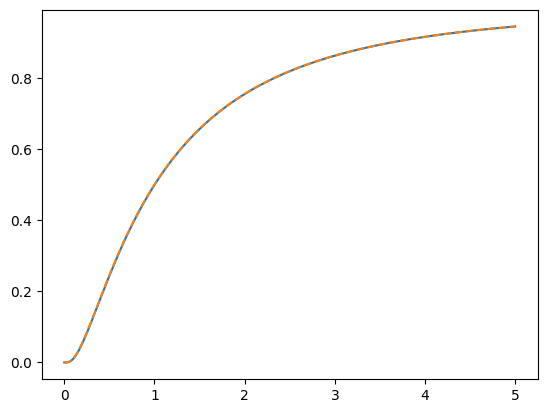

## example using log-normal distribution

# variable to evaluate the functions on

x = np.linspace(0,5,1001)

# note the function call pattern...

p1 = stats.lognorm.pdf(x,1)

c1 = stats.lognorm.cdf(x,1)

p2 = stats.lognorm.pdf(x,.1)

c2 = stats.lognorm.cdf(x,.1)

# draw the pdfs

fig,ax = plt.subplots(2,1,figsize=(4,7))

ax[0].plot(x,p1/sum(p1)) # question: why divide by sum here?

ax[0].plot(x,p1/sum(p1), x,p2/sum(p2))

ax[0].set_ylabel('probability')

ax[0].set_title('pdf(x)')

# draw the cdfs

ax[1].plot(x,c1)

ax[1].plot(x,c1, x,c2)

ax[1].set_ylabel('probability')

ax[1].set_title('cdf(x)')

plt.show()

## computing the cdf from the pdf

# compute the cdf

c1x = np.cumsum( p1*(x[1]-x[0]) )

plt.plot(x,c1)

plt.plot(x,c1x,'--')

plt.show()

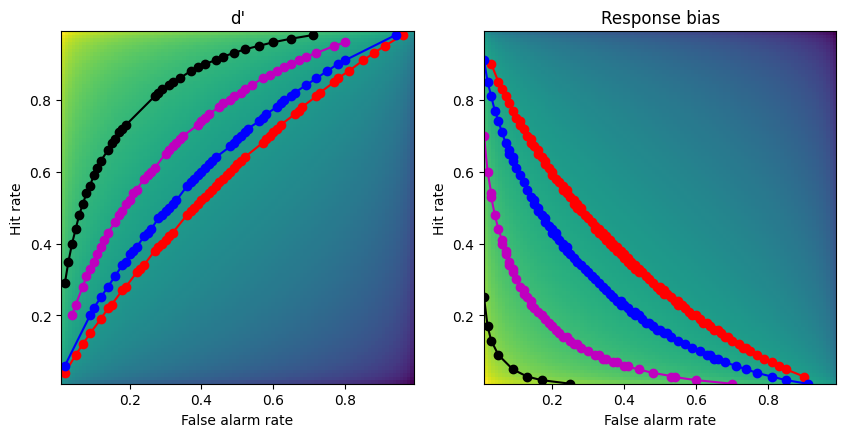

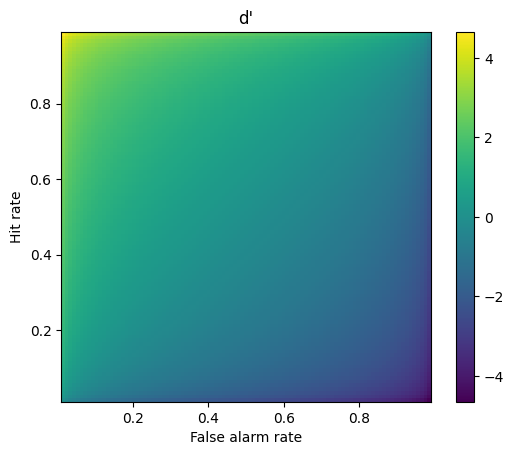

Signal detection theory

ROC curves

## first, re-create the dp and rb matrices from previous lectures

x = np.arange(.01,1,.01)

dp = np.tile(stats.norm.ppf(x),(99,1)).T - np.tile(stats.norm.ppf(x),(99,1))

rb = -( np.tile(stats.norm.ppf(x),(99,1)).T + np.tile(stats.norm.ppf(x),(99,1)) )/2

## create the 2D bias spaces and plot their ROC curves

rb2plot = [.3, .5, .9, 1.5] # d'/bias levels

tol = .01 # tolerance for matching levels

colorz = 'rbmk'

# setup the figure

fig,ax = plt.subplots(1,2,figsize=(10,5))

# show the 2D spaces

ax[0].imshow(dp,extent=[x[0],x[-1],x[0],x[-1]],origin='lower')

ax[0].set_xlabel('False alarm rate')

ax[0].set_ylabel('Hit rate')

ax[0].set_title("d'")

ax[1].imshow(rb,extent=[x[0],x[-1],x[0],x[-1]],origin='lower')

ax[1].set_xlabel('False alarm rate')

ax[1].set_ylabel('Hit rate')

ax[1].set_title('Response bias')

### now draw the isocontours

for i in range(len(rb2plot)):

# find d' points

idx = np.where((dp>rb2plot[i]-tol) & (dp<rb2plot[i]+tol))

ax[0].plot(x[idx[1]],x[idx[0]],'o-',color=colorz[i])

# find bias points

idx = np.where((rb>rb2plot[i]-tol) & (rb<rb2plot[i]+tol))

ax[1].plot(x[idx[1]],x[idx[0]],'o-',color=colorz[i])

plt.show()

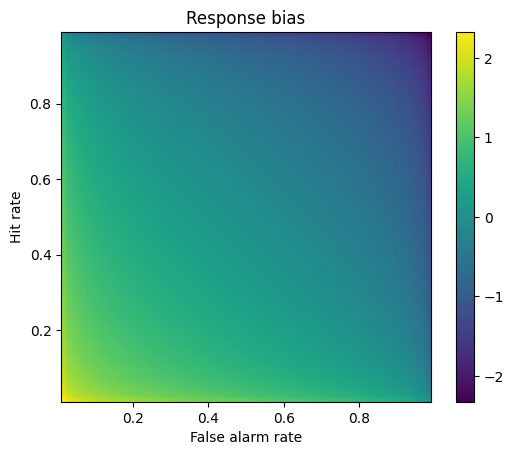

Response bias

## example from the slides

# step 1

hitP = 22/30

faP = 3/30

# step 2

hitZ = stats.norm.ppf(hitP)

faZ = stats.norm.ppf(faP)

# step 3

respBias = -(hitZ+faZ)/2

print(respBias)

0.32931292116725636

## 2D bias space

# response probabilities

x = np.arange(.01,1,.01)

# generate the space using tile expansion

rb = -( np.tile(stats.norm.ppf(x),(99,1)).T + np.tile(stats.norm.ppf(x),(99,1)) )/2

# show the 2D response bias space

plt.imshow(rb,extent=[x[0],x[-1],x[0],x[-1]],origin='lower')

plt.xlabel('False alarm rate')

plt.ylabel('Hit rate')

plt.title('Response bias')

plt.colorbar()

plt.show()

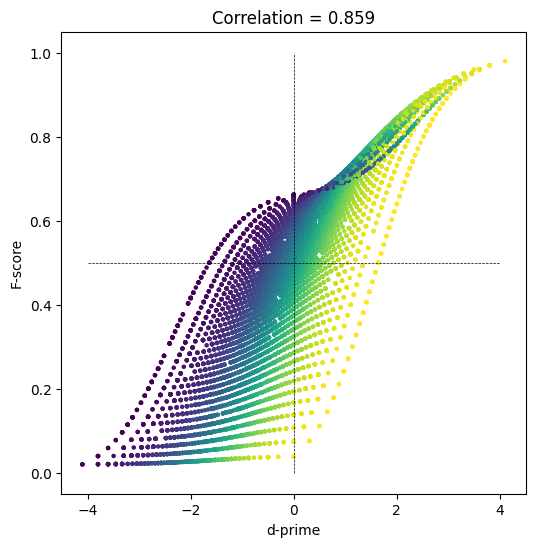

F-score

## run experiment

# number of 'trials' in the experiment

N = 50 # actual trials is 2N

# number of experiment repetitions

numExps = 10000

# initialize

Fscores = np.zeros(numExps)

dPrimes = np.zeros(numExps)

specificity = np.zeros(numExps)

### run the experiment!

for expi in range(numExps):

# generate data

H = np.random.randint(1,N) # hits

M = N-H # misses

CR = np.random.randint(1,N) # correct rejections

FA = N-CR # false alarms

# Fscore

Fscores[expi] = H / (H+(FA+M)/2)

# specificity

specificity[expi] = CR/(CR+FA)

# d'

dPrimes[expi] = stats.norm.ppf(H/N) - stats.norm.ppf(FA/N)

# not used here...

precision = H/(H+FA)

recall = H/(H+M)

## let's see how they relate to each other!

fig = plt.subplots(1,figsize=(6,6))

plt.scatter(dPrimes,Fscores,s=5,c=specificity)

plt.plot([-4,4],[.5,.5],'k--',linewidth=.5)

plt.plot([0,0],[0,1],'k--',linewidth=.5)

plt.xlabel('d-prime')

plt.ylabel('F-score')

plt.title('Correlation = %g' %np.round(np.corrcoef(Fscores,dPrimes)[1,0],3))

plt.show()

d-prime

## example from the slides

# step 1

hitP = 22/30

faP = 3/30

# step 2

hitZ = stats.norm.ppf(hitP)

faZ = stats.norm.ppf(faP)

# step 3

dPrime = hitZ-faZ

print(dPrime)

1.9044772887546881

## failure scenarios and their resolutions

hitZ = stats.norm.ppf(0/30)

faZ = stats.norm.ppf(22/30)

print(hitZ-faZ)

-inf

## 2D d' space

# response probabilities

x = np.arange(.01,1,.01)

# generate the space using tile expansion

dp = np.tile(stats.norm.ppf(x),(99,1)).T - np.tile(stats.norm.ppf(x),(99,1))

# show the 2D d' space

plt.imshow(dp,extent=[x[0],x[-1],x[0],x[-1]],origin='lower')

plt.xlabel('False alarm rate')

plt.ylabel('Hit rate')

plt.title("d'")

plt.colorbar()

plt.show()

Regression

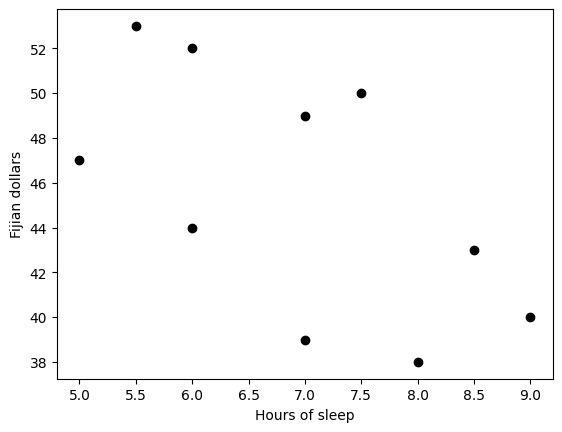

Simple regression

## example: effects of sleep on food spending

sleepHours = [5, 5.5, 6, 6, 7, 7, 7.5, 8, 8.5, 9]

dollars = [47, 53, 52, 44, 39, 49, 50, 38, 43, 40]

# start by showing the data

plt.plot(sleepHours,dollars,'ko',markerfacecolor='k')

plt.xlabel('Hours of sleep')

plt.ylabel('Fijian dollars')

plt.show()

## "manual" regression via least-squares fitting

# create the design matrix

desmat = np.vstack((np.ones(10),sleepHours)).T

print(desmat)

# compute the beta parameters (regression coefficients)

beta = np.linalg.lstsq(desmat,dollars,rcond=None)[0]

print(beta)

# predicted data values

yHat = desmat@beta

[[1. 5. ]

[1. 5.5]

[1. 6. ]

[1. 6. ]

[1. 7. ]

[1. 7. ]

[1. 7.5]

[1. 8. ]

[1. 8.5]

[1. 9. ]]

[62.84737679 -2.49602544]

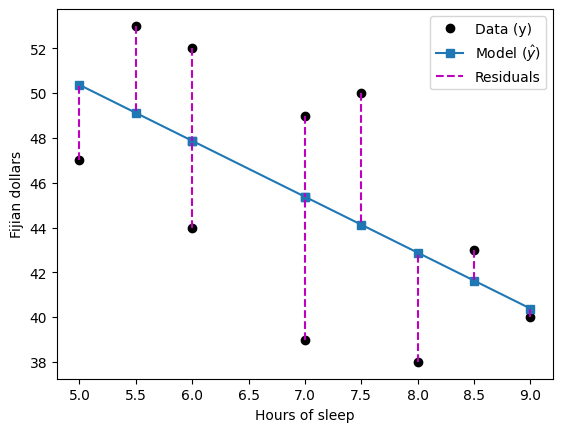

## show the predicted results on top of the "real" data

# show previous data

plt.plot(sleepHours,dollars,'ko',markerfacecolor='k')

# predicted results

plt.plot(sleepHours,yHat,'s-')

# show the residuals

for i in range(10):

plt.plot([sleepHours[i],sleepHours[i]],[dollars[i], yHat[i]],'m--')

plt.legend(('Data (y)','Model ($\^{y}$)','Residuals'))

plt.xlabel('Hours of sleep')

plt.ylabel('Fijian dollars')

plt.show()

## now with scipy

slope,intercept,r,p,std_err = stats.linregress(sleepHours,dollars)

print(intercept,slope)

print(beta)

62.84737678855326 -2.4960254372019075

[62.84737679 -2.49602544]

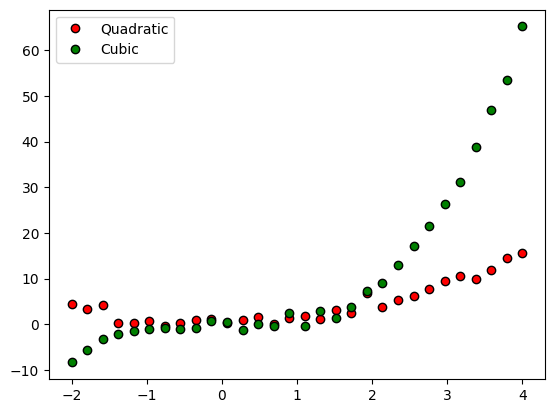

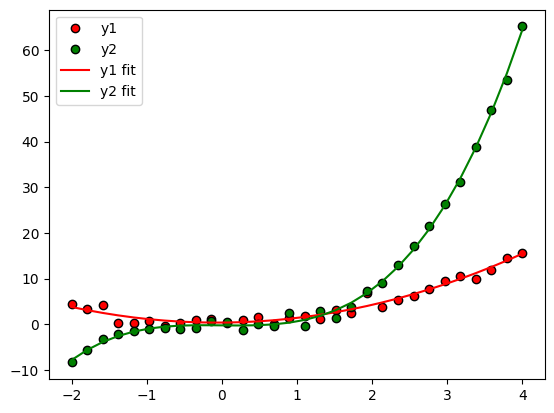

Polynomial regression

## generate the data

n = 30

x = np.linspace(-2,4,n)

y1 = x**2 + np.random.randn(n)

y2 = x**3 + np.random.randn(n)

# plot the data

plt.plot(x,y1,'ko',markerfacecolor='r')

plt.plot(x,y2,'ko',markerfacecolor='g')

plt.legend(('Quadratic','Cubic'))

plt.show()

## now for a polynomial fit

# for y1

pterms = np.polyfit(x,y1,2)

yHat1 = np.polyval(pterms,x)

# for y2

pterms = np.polyfit(x,y2,3)

yHat2 = np.polyval(pterms,x)

# and all the plotting

plt.plot(x,y1,'ko',markerfacecolor='r',label='y1')

plt.plot(x,y2,'ko',markerfacecolor='g',label='y2')

plt.plot(x,yHat1,'r',label='y1 fit')

plt.plot(x,yHat2,'g',label='y2 fit')

plt.legend()

plt.show()

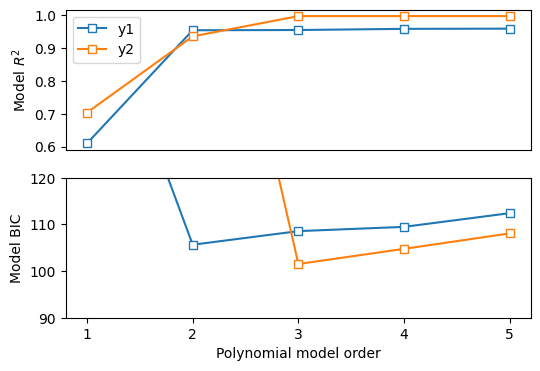

# compute R2

# compute R2 for several polynomial orders

orders = np.arange(1,6)

# output matrices

r2 = np.zeros((2,len(orders)))

sse = np.zeros((2,len(orders)))

# the loop!

for oi in range(len(orders)):

# fit the model with oi terms

pterms = np.polyfit(x,y1,orders[oi])

yHat = np.polyval(pterms,x)

# compute R2

ss_eta = sum((y1-yHat)**2) # numerator

ss_tot = sum((y1-np.mean(y1))**2) # denominator

r2[0,oi] = 1 - ss_eta/ss_tot # R^2

sse[0,oi] = ss_eta # store just the SSe for model comparison later

### repeat for y2

pterms = np.polyfit(x,y2,orders[oi])

yHat = np.polyval(pterms,x)

ss_eta = sum((y2-yHat)**2)

ss_tot = np.var(y2)*(n-1)

r2[1,oi] = 1 - ss_eta/ss_tot

sse[1,oi] = ss_eta

fig,ax = plt.subplots(2,1,figsize=(6,4))

# plot the R2 results

ax[0].plot(orders,r2[0,:],'s-',markerfacecolor='w')

ax[0].plot(orders,r2[1,:],'s-',markerfacecolor='w')

ax[0].set_ylabel('Model $R^2$')

ax[0].set_xticks([])

ax[0].legend(('y1','y2'))

# compute the Bayes Information Criterion

bic = n*np.log(sse) + orders*np.log(n)

ax[1].plot(orders,bic[0,:],'s-',markerfacecolor='w')

ax[1].plot(orders,bic[1,:],'s-',markerfacecolor='w')

ax[1].set_xlabel('Polynomial model order')

ax[1].set_xticks(range(1,6))

ax[1].set_ylabel('Model BIC')

# optional zoom

ax[1].set_ylim([90,120])

plt.show()

Multiple regression

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as stats

from sklearn.linear_model import LinearRegression

import statsmodels.api as sm

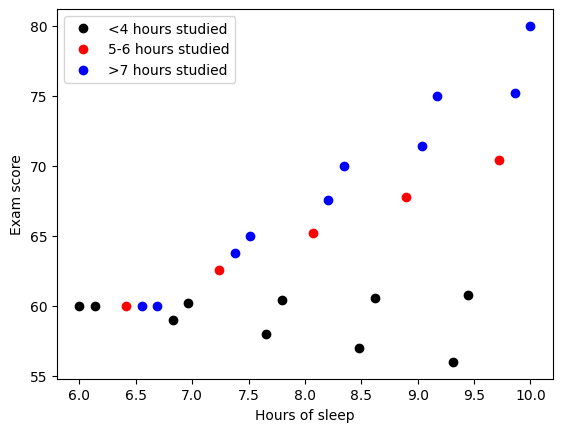

## example: effects of sleep and study hours on exam scores

### create the data

exam_scores = []

for ei in range(5):

exam_scores = np.hstack((exam_scores,60*np.ones(6)+np.linspace(-1,5,6)*ei))

hours_studied = np.tile(np.linspace(2,8,6),5)

ave_sleep_hrs = np.linspace(6,10,30)

## plot the data

### stratify by hours studied

# fewer than 4 hours studied

plotidx = hours_studied<4.1

plt.plot(ave_sleep_hrs[plotidx],exam_scores[plotidx],'ko',markerfacecolor='k')

# 5-6 hours studied

plotidx = np.logical_and(hours_studied>4.9, hours_studied<6.1)

plt.plot(ave_sleep_hrs[plotidx],exam_scores[plotidx],'ro',markerfacecolor='r')

# more than 6 hours

plotidx = hours_studied>6

plt.plot(ave_sleep_hrs[plotidx],exam_scores[plotidx],'bo',markerfacecolor='b')

plt.xlabel('Hours of sleep')

plt.ylabel('Exam score')

plt.legend(('<4 hours studied','5-6 hours studied','>7 hours studied'))

plt.show()

## multiple regression

# build the design matrix

desmat = np.vstack((np.ones((30,)),ave_sleep_hrs,hours_studied,ave_sleep_hrs*hours_studied)).T

multireg = sm.OLS(endog=exam_scores, exog=desmat).fit()

multireg.summary()

| Dep. Variable: | y | R-squared: | 0.993 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.992 |

| Method: | Least Squares | F-statistic: | 1182. |

| Date: | Sat, 12 Nov 2022 | Prob (F-statistic): | 6.74e-28 |

| Time: | 04:11:04 | Log-Likelihood: | -21.269 |

| No. Observations: | 30 | AIC: | 50.54 |

| Df Residuals: | 26 | BIC: | 56.14 |

| Df Model: | 3 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 82.4315 | 1.700 | 48.491 | 0.000 | 78.937 | 85.926 |

| x1 | -3.4511 | 0.215 | -16.087 | 0.000 | -3.892 | -3.010 |

| x2 | -7.6663 | 0.321 | -23.916 | 0.000 | -8.325 | -7.007 |

| x3 | 1.1736 | 0.040 | 29.623 | 0.000 | 1.092 | 1.255 |

| Omnibus: | 10.899 | Durbin-Watson: | 1.069 |

|---|---|---|---|

| Prob(Omnibus): | 0.004 | Jarque-Bera (JB): | 3.273 |

| Skew: | -0.438 | Prob(JB): | 0.195 |

| Kurtosis: | 1.640 | Cond. No. | 821. |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

# without the interaction term

multireg = sm.OLS(endog=exam_scores, exog=desmat[:,0:-1]).fit()

multireg.summary()

| Dep. Variable: | y | R-squared: | 0.747 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.728 |

| Method: | Least Squares | F-statistic: | 39.86 |

| Date: | Sat, 12 Nov 2022 | Prob (F-statistic): | 8.76e-09 |

| Time: | 04:11:16 | Log-Likelihood: | -74.492 |

| No. Observations: | 30 | AIC: | 155.0 |

| Df Residuals: | 27 | BIC: | 159.2 |

| Df Model: | 2 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 36.0556 | 3.832 | 9.409 | 0.000 | 28.193 | 43.918 |

| x1 | 2.4167 | 0.477 | 5.071 | 0.000 | 1.439 | 3.395 |

| x2 | 1.7222 | 0.278 | 6.203 | 0.000 | 1.153 | 2.292 |

| Omnibus: | 0.189 | Durbin-Watson: | 1.000 |

|---|---|---|---|

| Prob(Omnibus): | 0.910 | Jarque-Bera (JB): | 0.004 |

| Skew: | 0.000 | Prob(JB): | 0.998 |

| Kurtosis: | 2.943 | Cond. No. | 66.6 |

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

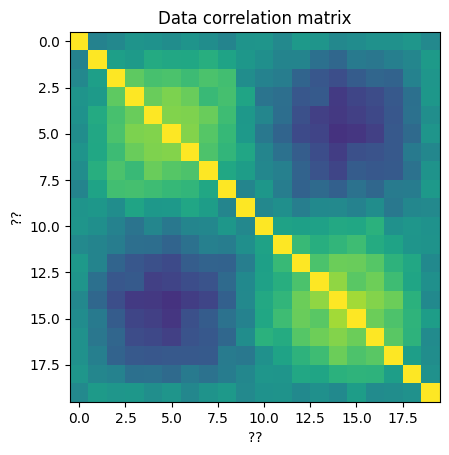

# inspect the correlations of the IVs

np.corrcoef(desmat[:,1:].T)

array([[1. , 0.19731231, 0.49270769],

[0.19731231, 1. , 0.94068915],

[0.49270769, 0.94068915, 1. ]])

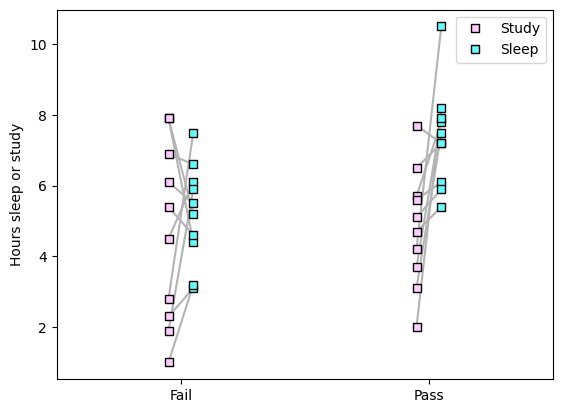

Logistic regression

import numpy as np

import matplotlib.pyplot as plt

from sklearn.linear_model import LogisticRegression

## generate the data

exam_outcome = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1];

study_hours = [7.9, 7.9, 2.8, 5.4, 6.1, 4.5, 6.9, 2.3, 1.9, 1, 3.1, 5.7, 5.6, 4.7, 4.2, 2, 7.7, 6.5, 5.1, 3.7]

sleep_hours = [4.4, 5.2, 7.5, 4.6, 5.5, 6.1, 6.6, 3.1, 5.9, 3.2, 7.5, 7.8, 6.1, 5.4, 10.5, 8.2, 7.2, 7.2, 5.9, 7.9]

n = len(exam_outcome)

# and plot

for i in range(n):

plt.plot([exam_outcome[i]-.05, exam_outcome[i]+.05],[study_hours[i],sleep_hours[i]],color=[.7,.7,.7])

plt.plot(exam_outcome-.05*np.ones(n),study_hours,'ks',markerfacecolor=[1,.8,1],label='Study')

plt.plot(exam_outcome+.05*np.ones(n),sleep_hours,'ks',markerfacecolor=[.39,1,1],label='Sleep')

plt.xticks([0,1],labels=('Fail','Pass'))

plt.xlim([-.5,1.5])

plt.ylabel('Hours sleep or study')

plt.legend()

plt.show()

## now for the logistic regression

# create a model

logregmodel = LogisticRegression(solver='liblinear')#'newton-cg')#

# create the design matrix

desmat = np.vstack((study_hours,sleep_hours)).T

logregmodel.fit(desmat,np.array(exam_outcome))

print(logregmodel.intercept_)

print(logregmodel.coef_)

[-0.96510192]

[[-0.19445677 0.3361749 ]]

# compute predictions and accuracy

predvals = logregmodel.predict(desmat) # class labels

predvalsP = logregmodel.predict_proba(desmat) # probability values

print(predvals)

print(np.array(exam_outcome))

print(predvalsP)

logregmodel.score(desmat,np.array(exam_outcome))

[0 0 1 0 0 1 0 0 1 0 1 1 0 0 1 1 0 1 1 1]

[0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1]

[[0.7353894 0.2646106 ]

[0.67987577 0.32012423]

[0.26664125 0.73335875]

[0.61509116 0.38490884]

[0.5750111 0.4249889 ]

[0.44756611 0.55243389]

[0.52201059 0.47798941]

[0.59150979 0.40849021]

[0.343246 0.656754 ]

[0.5209375 0.4790625 ]

[0.27820281 0.72179719]

[0.36617566 0.63382434]

[0.50084824 0.49915176]

[0.51592069 0.48407931]

[0.1482976 0.8517024 ]

[0.19740089 0.80259911]

[0.51048841 0.48951159]

[0.45229843 0.54770157]

[0.49335028 0.50664972]

[0.27464343 0.72535657]]

0.7

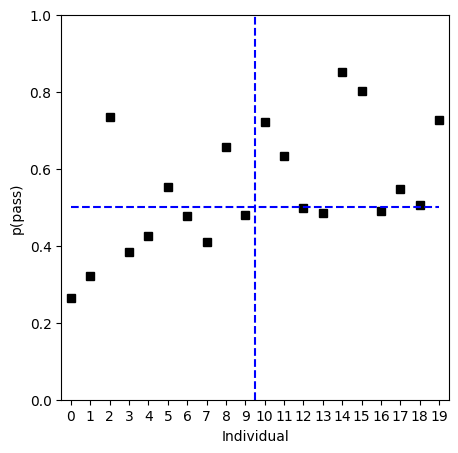

# plotting

fig,ax = plt.subplots(1,1,figsize=(5,5))

ax.plot(predvalsP[:,1],'ks')

ax.plot([0,19],[.5,.5],'b--')

ax.plot([9.5,9.5],[0,1],'b--')

ax.set_xticks(np.arange(20))

ax.set_xlabel('Individual')

ax.set_ylabel('p(pass)')

ax.set_xlim([-.5, 19.5])

ax.set_ylim([0,1])

plt.show()

Clustering and dimension-reduction

PCA

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

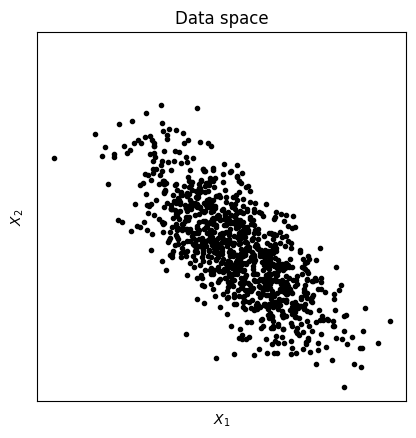

## Create the data

N = 1000

# data

x = np.array([ np.random.randn(N), .4*np.random.randn(N) ]).T

# rotation matrix

th = np.pi/4

R1 = [ [np.cos(th), -np.sin(th)], [np.sin(th), np.cos(th)] ]

# rotate data

y = x@np.array(R1)

axlim = [-1.1*max(abs(y.flatten())), 1.1*max(abs(y.flatten()))] # axis limits

# and plot

plt.plot(y[:,0],y[:,1],'k.')

plt.xticks([])

plt.yticks([])

plt.xlabel('$X_1$')

plt.ylabel('$X_2$')

plt.axis('square')

plt.title('Data space')

plt.show()

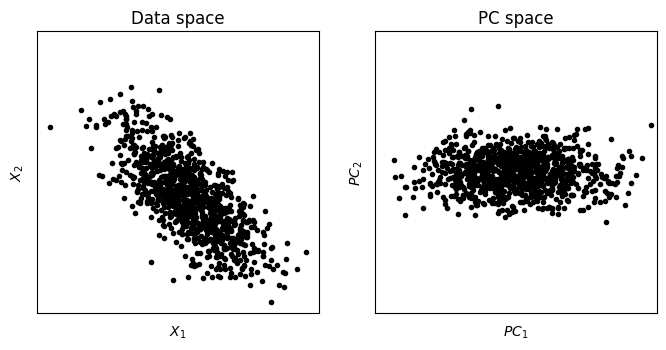

## now for PCA

# PCA using scikitlearn's function

pca = PCA().fit(y)

# get the PC scores

pcscores = pca.transform(y)

# and plot

fig,ax = plt.subplots(1,2,figsize=(8,4))

ax[0].plot(y[:,0],y[:,1],'k.')

ax[0].axis('square')

ax[0].set_xticks([])

ax[0].set_yticks([])

ax[1].set_xlim(axlim)

ax[1].set_ylim(axlim)

ax[0].set_xlabel('$X_1$')

ax[0].set_ylabel('$X_2$')

ax[0].set_title('Data space')

ax[1].plot(pcscores[:,0],pcscores[:,1],'k.')

ax[1].axis('square')

ax[1].set_xticks([])

ax[1].set_yticks([])

ax[1].set_xlim(axlim)

ax[1].set_ylim(axlim)

ax[1].set_xlabel('$PC_1$')

ax[1].set_ylabel('$PC_2$')

ax[1].set_title('PC space')

plt.show()

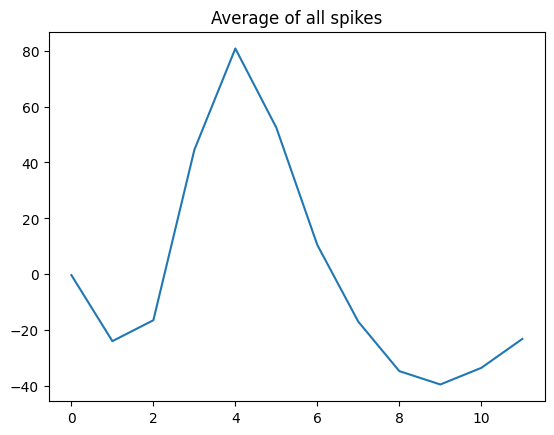

## for dimension reduction

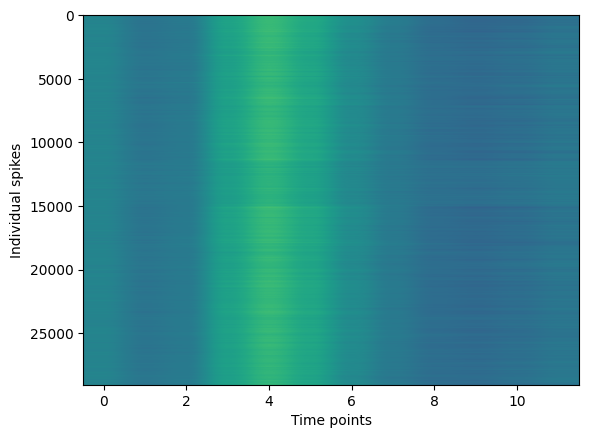

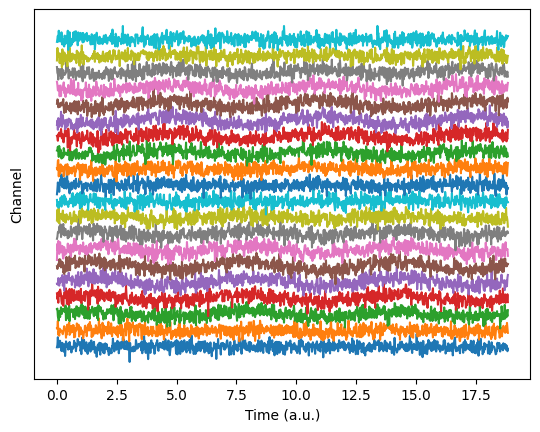

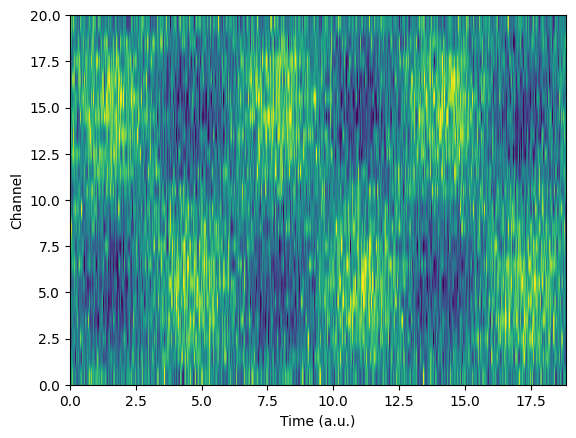

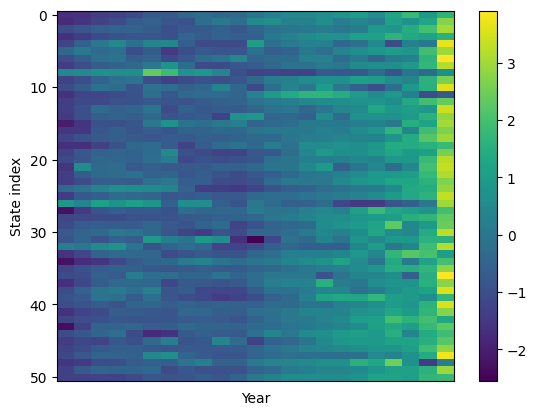

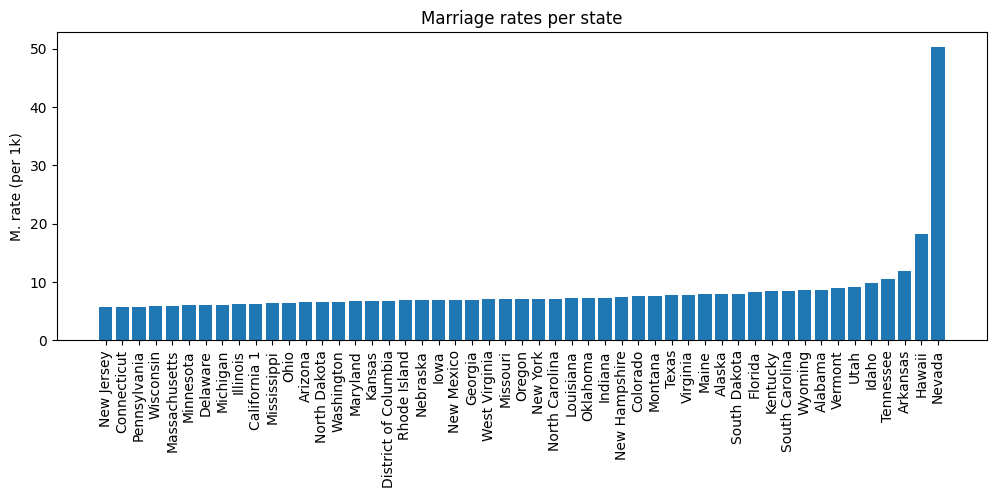

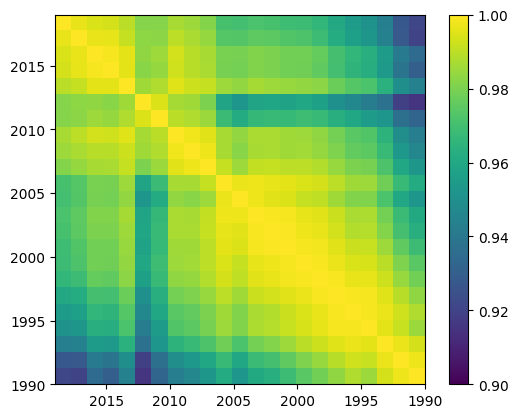

spikes = np.loadtxt('spikes.csv',delimiter=',')

# let's see it!

plt.plot(np.mean(spikes,axis=0))

plt.title('Average of all spikes')

plt.show()

plt.imshow(spikes,aspect='auto')

plt.xlabel('Time points')

plt.ylabel('Individual spikes')

plt.show()

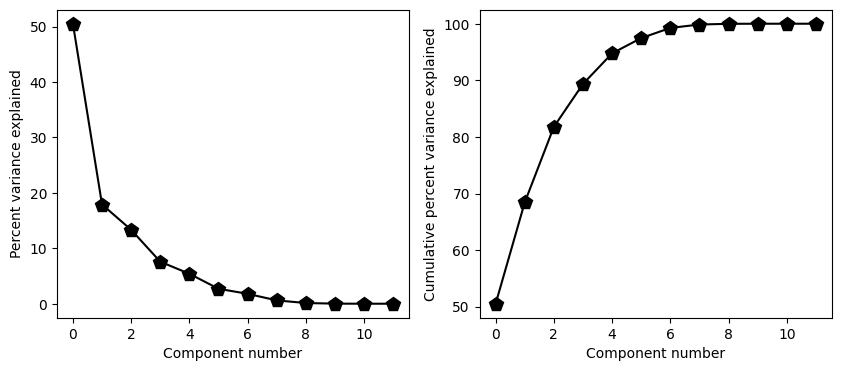

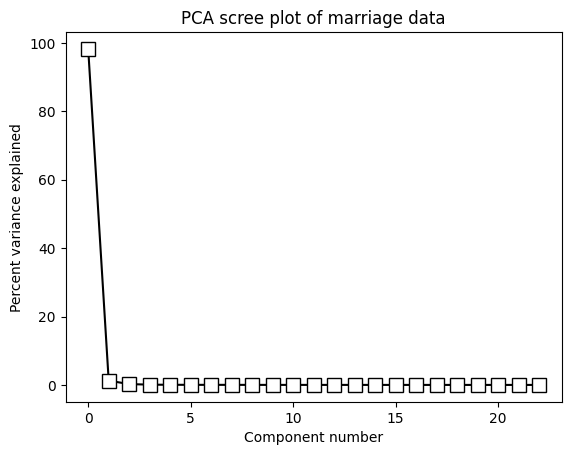

## pca

# PCA using scikitlearn's function

pca = PCA().fit(spikes)

# get the PC scores and the eigenspectrum

pcscores = pca.transform(spikes)

explVar = pca.explained_variance_

explVar = 100*explVar/sum(explVar) # convert to %total

coeffs = pca.components_

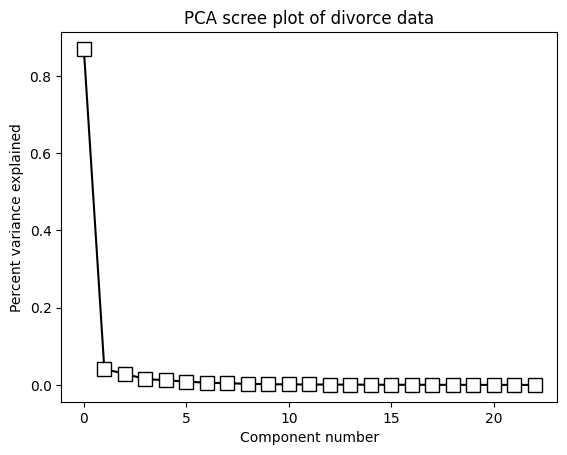

# show the scree plot (a.k.a. eigenspectrum)

fig,ax = plt.subplots(1,2,figsize=(10,4))

ax[0].plot(explVar,'kp-',markerfacecolor='k',markersize=10)

ax[0].set_xlabel('Component number')

ax[0].set_ylabel('Percent variance explained')

ax[1].plot(np.cumsum(explVar),'kp-',markerfacecolor='k',markersize=10)

ax[1].set_xlabel('Component number')

ax[1].set_ylabel('Cumulative percent variance explained')

plt.show()

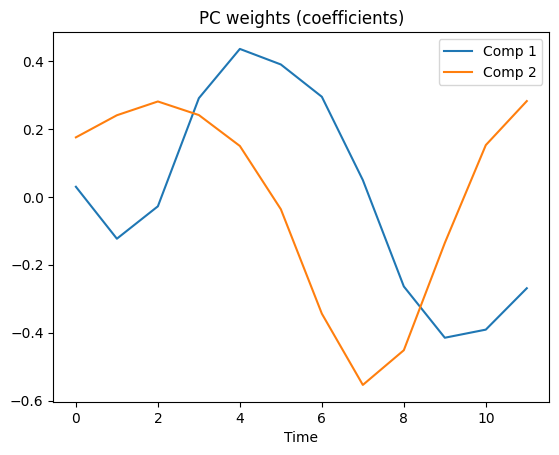

# now show the PC weights for the top two components

plt.plot(coeffs[0,:])

plt.plot(coeffs[1,:])

plt.xlabel('Time')

plt.legend(('Comp 1','Comp 2'))

plt.title('PC weights (coefficients)')

plt.show()

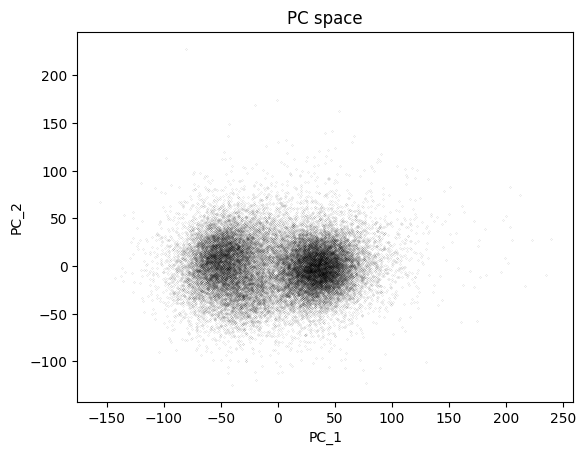

## finally, show the PC scores

plt.plot(pcscores[:,0],pcscores[:,1],'k.',markersize=.1)

plt.xlabel('PC_1')

plt.ylabel('PC_2')

plt.title('PC space')

plt.show()

K-nearest neighbor

import numpy as np

import matplotlib.pyplot as plt

from scipy import stats

from sklearn.neighbors import KNeighborsClassifier

## Create data

nPerClust = 50

# XY centroid locations

A = [ 1, 0 ]

B = [ -1, 0 ]

# generate data

a = [ A[0]+np.random.randn(nPerClust) , A[1]+np.random.randn(nPerClust) ]

b = [ B[0]+np.random.randn(nPerClust) , B[1]+np.random.randn(nPerClust) ]

# concatanate into a list

data = np.transpose( np.concatenate((a,b),axis=1) )

grouplabels = np.concatenate((np.zeros(nPerClust),np.ones(nPerClust)))

# group color assignment

groupcolors = 'br'

# show the data

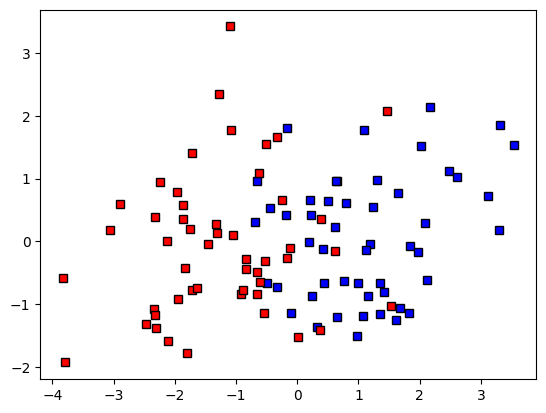

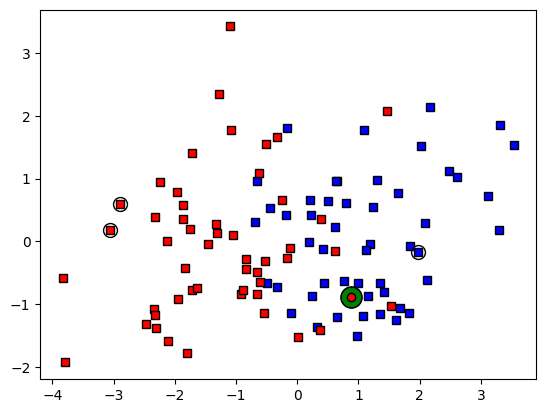

fig,ax = plt.subplots(1)

ax.plot(data[grouplabels==0,0],data[grouplabels==0,1],'ks',markerfacecolor=groupcolors[0])

ax.plot(data[grouplabels==1,0],data[grouplabels==1,1],'ks',markerfacecolor=groupcolors[1])

plt.show()

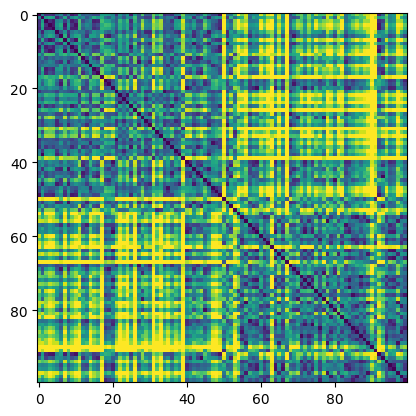

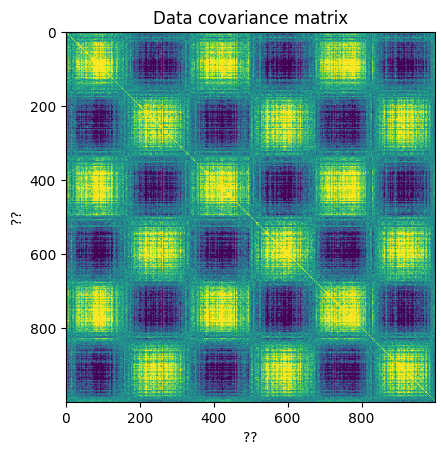

## compute distance matrix

# initialize

distmat = np.zeros((nPerClust*2,nPerClust*2))

# loop over elements

for i in range(nPerClust*2):

for j in range(nPerClust*2):

distmat[i,j] = np.sqrt( (data[i,0]-data[j,0])**2 + (data[i,1]-data[j,1])**2 )

plt.imshow(distmat,vmax=4)

plt.show()

matplotlib.lines.Line2D

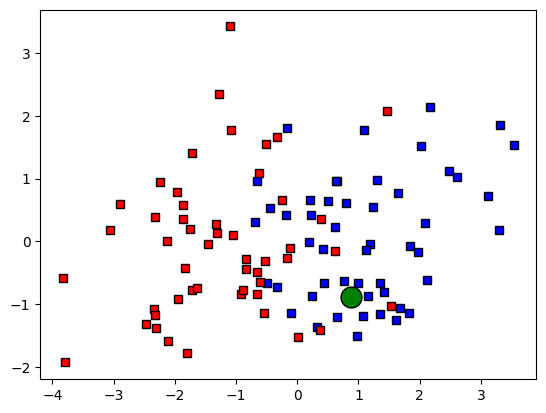

## create the new data point

# random new point

newpoint = 2*np.random.rand(2)-1

# and plot it

ax.plot(newpoint[0],newpoint[1],'ko',MarkerFaceColor='g',markersize=15)

fig

## create the new data point

# random new point

newpoint = 2*np.random.rand(2)-1

# and plot it

ax.plot(newpoint[0],newpoint[1],'ko',markerfacecolor='g',markersize=15)

fig

#plt.show()

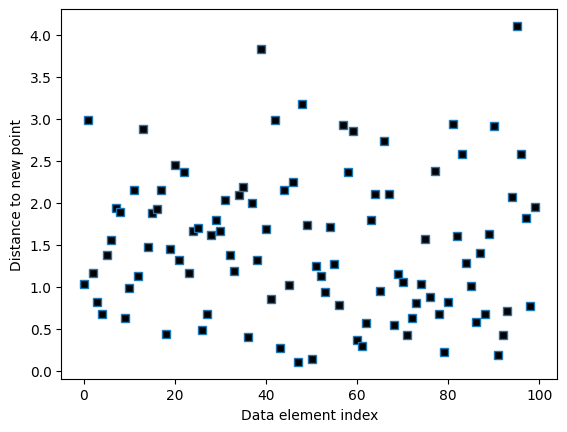

# compute distance vector

distvec = np.zeros(nPerClust*2)

for i in range(nPerClust*2):

distvec[i] = np.sqrt( (data[i,0]-newpoint[0])**2 + (data[i,1]-newpoint[1])**2 )

# show the distances

plt.plot(distvec,'s',markerfacecolor='k')

plt.xlabel('Data element index')

plt.ylabel('Distance to new point')

plt.show()

## now for the labeling

# k parameter

k = 3

# sort the distances

sortidx = np.argsort(distvec)

# find the group assignment of the nearest neighbors

print(grouplabels[sortidx[:k]])

whichgroup = int( np.median(grouplabels[sortidx[:k]]) )

print('New data belong to group ' + str(whichgroup))

# and re-plot

ax.plot(newpoint[0],newpoint[1],'ko',markerfacecolor='g',markersize=15)

ax.plot(newpoint[0],newpoint[1],'ko',markerfacecolor=groupcolors[whichgroup])

ax.plot(data[sortidx[:k],0],data[sortidx[:k],1],'ko',markersize=10,fillstyle='none')

fig

[0. 1. 1.]

New data belong to group 1

## now using Python functions

knn = KNeighborsClassifier(n_neighbors=k, metric='euclidean')

knn.fit(data,grouplabels)

whichgroupP = knn.predict(newpoint.reshape(1,-1))

#mode, _ = stats.mode(_y[neigh_ind, k], axis=1)

print('New data belong to group ' + str(whichgroupP[0]))

New data belong to group 0.0

/Users/m0/mambaforge/lib/python3.10/site-packages/sklearn/neighbors/_classification.py:228: FutureWarning: Unlike other reduction functions (e.g. `skew`, `kurtosis`), the default behavior of `mode` typically preserves the axis it acts along. In SciPy 1.11.0, this behavior will change: the default value of `keepdims` will become False, the `axis` over which the statistic is taken will be eliminated, and the value None will no longer be accepted. Set `keepdims` to True or False to avoid this warning.

mode, _ = stats.mode(_y[neigh_ind, k], axis=1)

K-means clustering

import numpy as np

import matplotlib.pyplot as plt

import pylab

from scipy import stats

from sklearn.cluster import KMeans

from mpl_toolkits.mplot3d import Axes3D

## Create data

nPerClust = 50

# blur around centroid (std units)

blur = 1

# XY centroid locations

A = [ 1, 1 ]

B = [ -3, 1 ]

C = [ 3, 3 ]

# generate data

a = [ A[0]+np.random.randn(nPerClust)*blur , A[1]+np.random.randn(nPerClust)*blur ]

b = [ B[0]+np.random.randn(nPerClust)*blur , B[1]+np.random.randn(nPerClust)*blur ]

c = [ C[0]+np.random.randn(nPerClust)*blur , C[1]+np.random.randn(nPerClust)*blur ]

# concatanate into a list

data = np.transpose( np.concatenate((a,b,c),axis=1) )

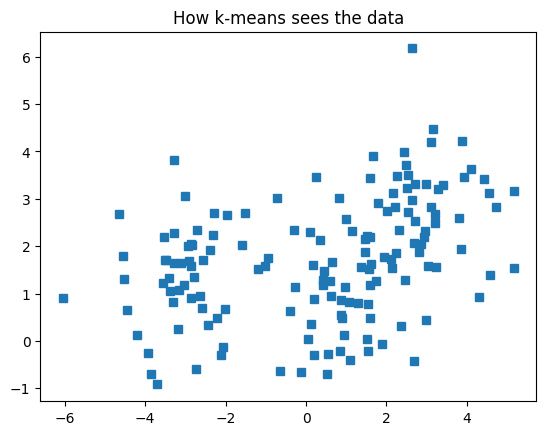

# show the data

plt.plot(data[:,0],data[:,1],'s')

plt.title('How k-means sees the data')

plt.show()

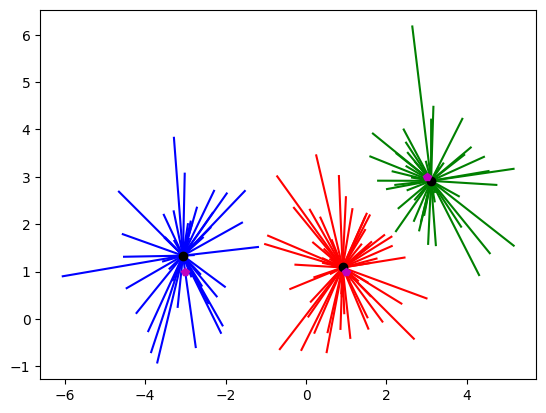

## k-means clustering

k = 3 # how many clusters?

kmeans = KMeans(n_clusters=k)

kmeans = kmeans.fit(data)

# group labels

groupidx = kmeans.predict(data)

# centroids

cents = kmeans.cluster_centers_

# draw lines from each data point to the centroids of each cluster

lineColors = 'rgbgmrkbgm';

for i in range(0,len(data)):

plt.plot([ data[i,0],cents[groupidx[i],0] ],[ data[i,1],cents[groupidx[i],1] ],lineColors[groupidx[i]])

# and now plot the centroid locations

plt.plot(cents[:,0],cents[:,1],'ko')

# finally, the "ground-truth" centers

plt.plot(A[0],A[1],'mp')

plt.plot(B[0],B[1],'mp')

plt.plot(C[0],C[1],'mp')

plt.show()

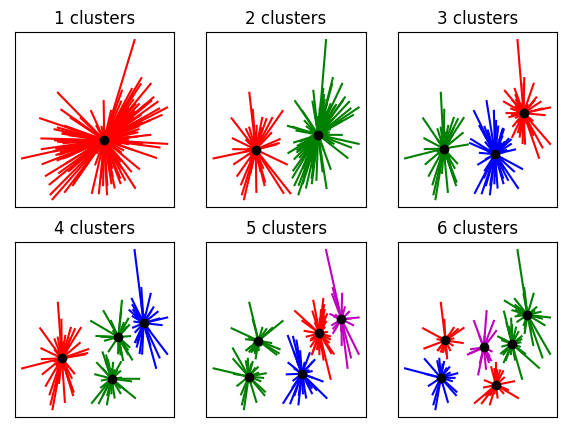

## determining the appropriate number of clusters (qualitative)

fig,ax = plt.subplots(2,3,figsize=(7,5))

ax = ax.flatten()

for k in range(6):

kmeans = KMeans(n_clusters=k+1).fit(data)

groupidx = kmeans.predict(data)

cents = kmeans.cluster_centers_

# draw lines from each data point to the centroids of each cluster

for i in range(0,len(data)):

ax[k].plot([ data[i,0],cents[groupidx[i],0] ],[ data[i,1],cents[groupidx[i],1] ],lineColors[groupidx[i]])

# and now plot the centroid locations

ax[k].plot(cents[:,0],cents[:,1],'ko')

ax[k].set_xticks([])

ax[k].set_yticks([])

ax[k].set_title('%g clusters'%(k+1))

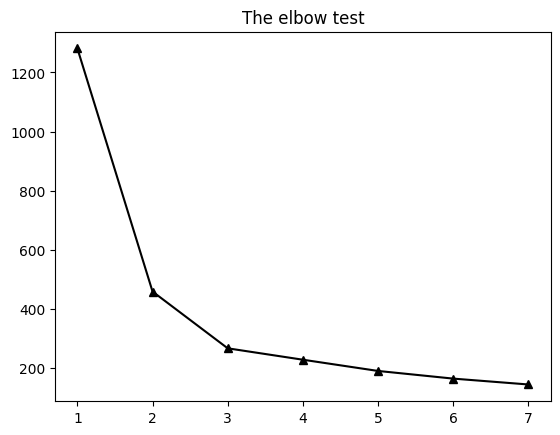

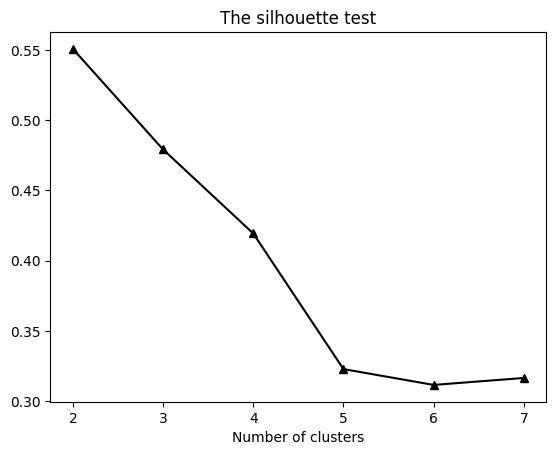

## number of clusters (quantative)

from sklearn.metrics import silhouette_samples, silhouette_score

ssds = np.zeros(7)

sils = np.zeros(7)/0

for k in range(7):

kmeans = KMeans(n_clusters=k+1).fit(data)

ssds[k] = np.mean(kmeans.inertia_)

if k>0:

s = silhouette_samples(data,kmeans.predict(data))

sils[k] = np.mean( s )

plt.plot(np.arange(1,8),ssds,'k^-',markerfacecolor='k')

plt.title('The elbow test')

plt.show()

plt.plot(np.arange(1,8),sils,'k^-',markerfacecolor='k')

plt.title('The silhouette test')

plt.xlabel('Number of clusters')

plt.show()

/var/folders/93/6sy7vf8142969b_8w873sxcc0000gn/T/ipykernel_19313/4079972229.py:6: RuntimeWarning: invalid value encountered in divide

sils = np.zeros(7)/0

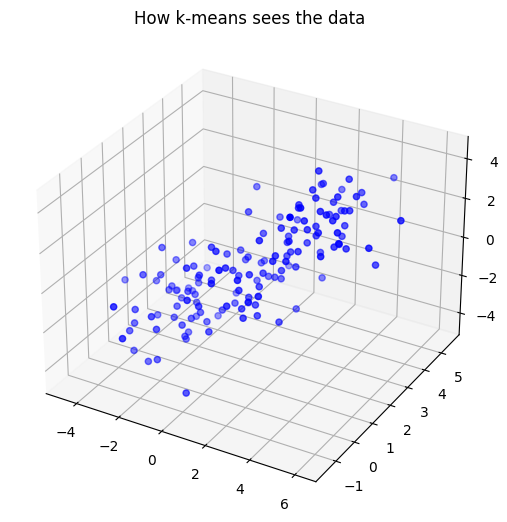

## Try again in 3D

nPerClust = 50

# blur around centroid (std units)

n = 1

# XY centroid locations

A = [ 1, 2, 0 ]

B = [ -2, 1, -2 ]

C = [ 3, 3, 2 ]

# generate data

a = [ A[0]+np.random.randn(nPerClust)*n , A[1]+np.random.randn(nPerClust)*n , A[2]+np.random.randn(nPerClust)*n ]

b = [ B[0]+np.random.randn(nPerClust)*n , B[1]+np.random.randn(nPerClust)*n , B[2]+np.random.randn(nPerClust)*n ]

c = [ C[0]+np.random.randn(nPerClust)*n , C[1]+np.random.randn(nPerClust)*n , C[2]+np.random.randn(nPerClust)*n ]

# concatanate into a list

data = np.transpose( np.concatenate((a,b,c),axis=1) )

# show the data

ax = Axes3D(plt.figure())

ax.scatter(data[:,0],data[:,1],data[:,2], c = 'b', marker='o')

plt.title('How k-means sees the data')

plt.show()

/var/folders/93/6sy7vf8142969b_8w873sxcc0000gn/T/ipykernel_19313/2150109989.py:22: MatplotlibDeprecationWarning: Axes3D(fig) adding itself to the figure is deprecated since 3.4. Pass the keyword argument auto_add_to_figure=False and use fig.add_axes(ax) to suppress this warning. The default value of auto_add_to_figure will change to False in mpl3.5 and True values will no longer work in 3.6. This is consistent with other Axes classes.

ax = Axes3D(plt.figure())

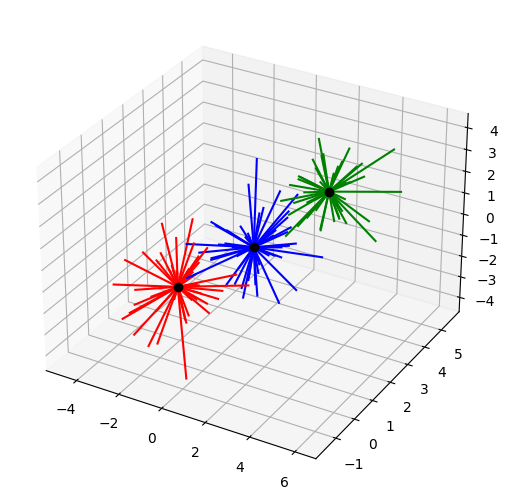

k = 3 # how many clusters?

kmeans = KMeans(n_clusters=k)

kmeans = kmeans.fit(data)

# group labels

groupidx = kmeans.predict(data)

# centroids

cents = kmeans.cluster_centers_

# draw lines from each data point to the centroids of each cluster

lineColors = 'rgbgmrkbgm';

ax = Axes3D(plt.figure())

for i in range(0,len(data)):

ax.plot([ data[i,0],cents[groupidx[i],0] ],[ data[i,1],cents[groupidx[i],1] ],[ data[i,2],cents[groupidx[i],2] ],lineColors[groupidx[i]])

# and now plot the centroid locations

ax.plot(cents[:,0],cents[:,1],cents[:,2],'ko')

plt.show()

/var/folders/93/6sy7vf8142969b_8w873sxcc0000gn/T/ipykernel_19313/3071357782.py:11: MatplotlibDeprecationWarning: Axes3D(fig) adding itself to the figure is deprecated since 3.4. Pass the keyword argument auto_add_to_figure=False and use fig.add_axes(ax) to suppress this warning. The default value of auto_add_to_figure will change to False in mpl3.5 and True values will no longer work in 3.6. This is consistent with other Axes classes.

ax = Axes3D(plt.figure())

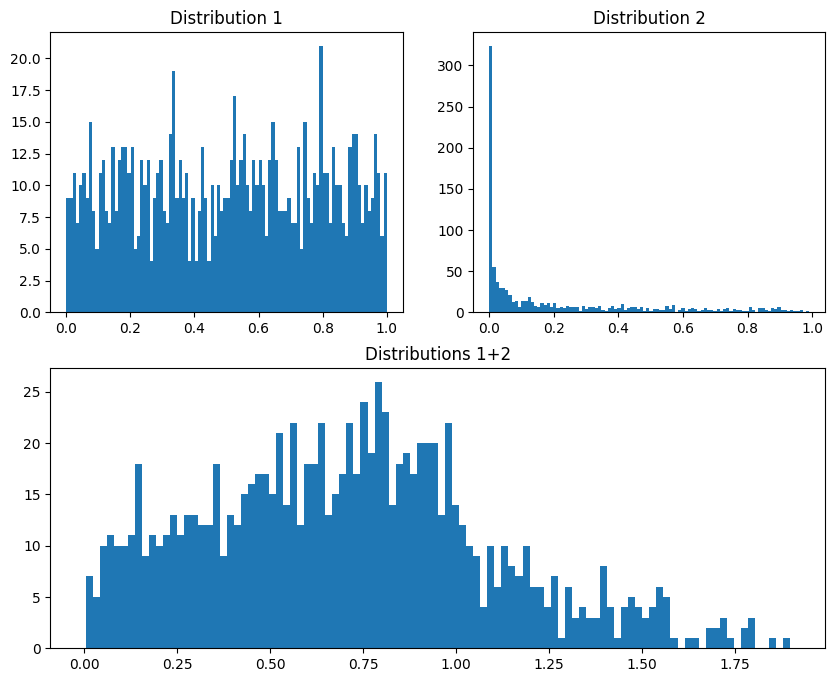

ICA

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import FastICA

## Create the data

#number of data points

N = 1000

#a non-Gaussian distribution

dist1 = np.random.rand(N)

# another non-Gaussian distribution

dist2 = np.random.rand(N)**4

# setup the figure

fig = plt.figure(constrained_layout=False,figsize=(10,8))

axs = fig.add_gridspec(2,2)

# individual distributions

ax1 = fig.add_subplot(axs[0,0])

ax1.hist(dist1,100)

ax1.set_title('Distribution 1')

ax2 = fig.add_subplot(axs[0,1])

ax2.hist(dist2,100)

ax2.set_title('Distribution 2')

# and their summed histogram

ax3 = fig.add_subplot(axs[1,:])

ax3.hist(dist1+dist2,100)

ax3.set_title('Distributions 1+2')

plt.show()

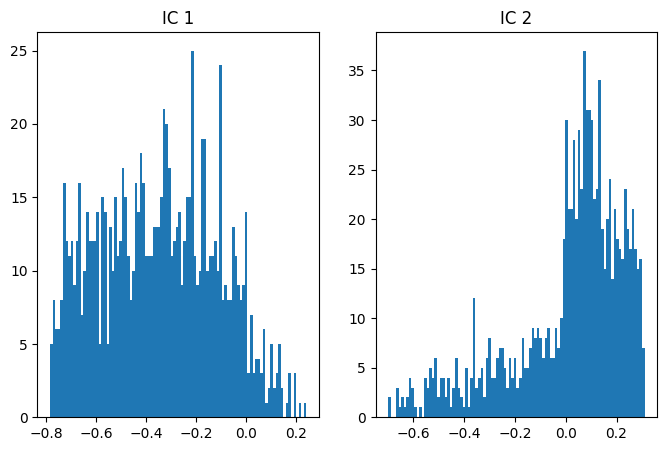

## ICA

# two non-Gaussian distributions

data = np.vstack((.4*dist1+.3*dist2, .8*dist1-.7*dist2))

# ICA and scores

fastica = FastICA(max_iter=10000,tol=.0000001)

b = fastica.fit_transform(data)

iscores = b@data

# plot distributions

# IC 1

fig,ax = plt.subplots(1,2,figsize=(8,5))

ax[0].hist(iscores[0,:],100)

ax[0].set_title('IC 1')

# IC 2

ax[1].hist(iscores[1,:],100)

ax[1].set_title('IC 2')

plt.show()

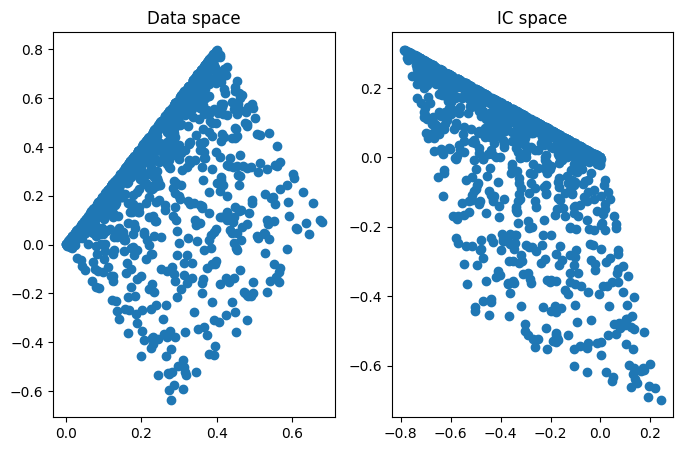

## look at the data in data space and IC space

fig,ax = plt.subplots(1,2,figsize=(8,5))

ax[0].plot(data[0,:],data[1,:],'o')

ax[0].set_title('Data space')

ax[1].plot(iscores[0,:],iscores[1,:],'o')

ax[1].set_title('IC space')

plt.show()

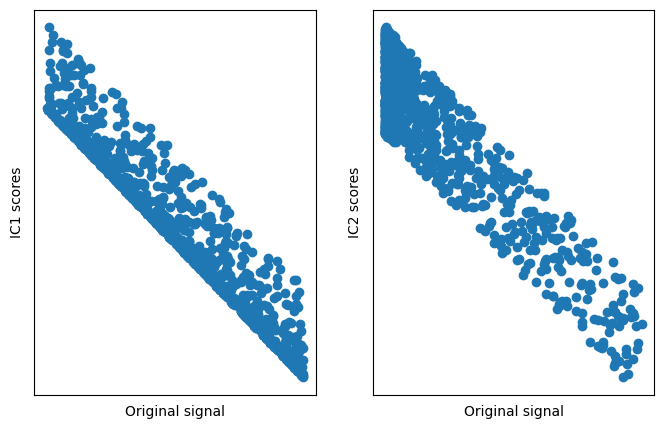

## show that the original data match the ICs

# now plot data as a function of ICs

fig,ax = plt.subplots(1,2,figsize=(8,5))

ax[0].plot(dist1,iscores[0,:],'o')

ax[0].set_xticks([])

ax[0].set_yticks([])

ax[0].set_xlabel('Original signal')

ax[0].set_ylabel('IC1 scores')

ax[1].plot(dist2,iscores[1,:],'o')

ax[1].set_xticks([])

ax[1].set_yticks([])

ax[1].set_xlabel('Original signal')

ax[1].set_ylabel('IC2 scores')

plt.show()

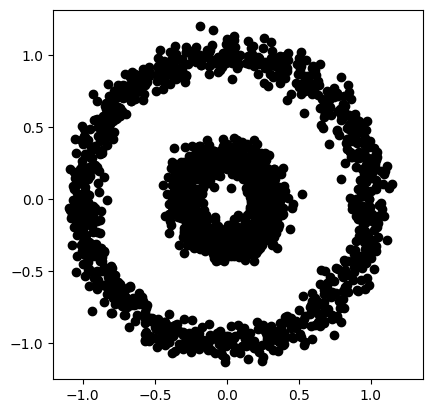

dbscan

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import DBSCAN

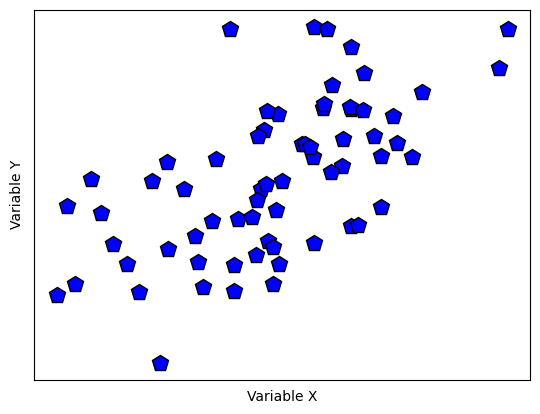

## Create data

nPerClust = 50

# blur around centroid (std units)

blur = .5

# XY centroid locations

A = [ 1, 1 ]

B = [ -3, 1 ]

C = [ 3, 3 ]

# generate data

a = [ A[0]+np.random.randn(nPerClust)*blur , A[1]+np.random.randn(nPerClust)*blur ]

b = [ B[0]+np.random.randn(nPerClust)*blur , B[1]+np.random.randn(nPerClust)*blur ]

c = [ C[0]+np.random.randn(nPerClust)*blur , C[1]+np.random.randn(nPerClust)*blur ]

# concatanate into a list

data = np.transpose( np.concatenate((a,b,c),axis=1) )

# show the data

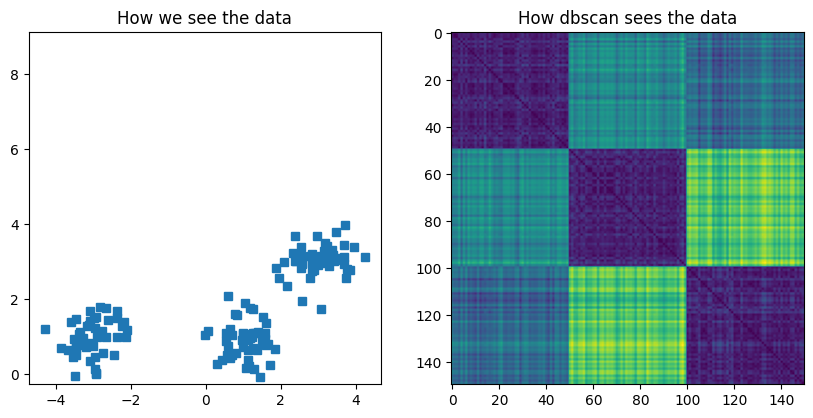

fig,ax = plt.subplots(1,2,figsize=(10,10))

ax[0].plot(data[:,0],data[:,1],'s')

ax[0].set_title('How we see the data')

ax[0].axis('square')

### distance matrix

D = np.zeros((len(data),len(data)))

for i in range(len(D)):

for j in range(len(D)):

D[i,j] = np.sqrt( (data[i,0]-data[j,0])**2 + (data[i,1]-data[j,1])**2 )

ax[1].imshow(D)

ax[1].set_title('How dbscan sees the data')

plt.show()

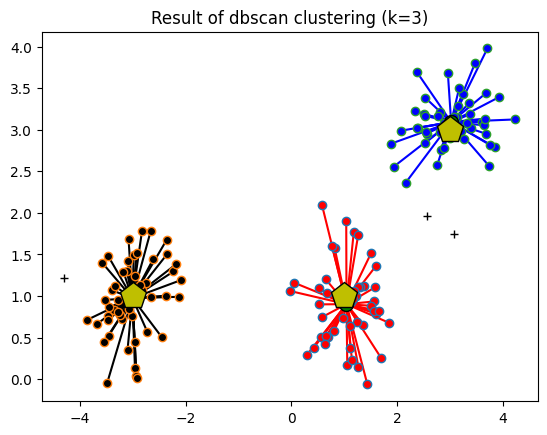

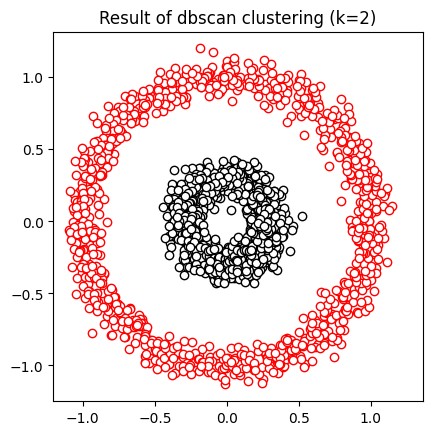

## dbscan

clustmodel = DBSCAN(eps=.6,min_samples=6).fit(data)

groupidx = clustmodel.labels_

# number of clusters

nclust = max(groupidx)+1 # +1 for indexing

# compute cluster centers

cents = np.zeros((nclust,2))

for ci in range(nclust):

cents[ci,0] = np.mean(data[groupidx==ci,0])

cents[ci,1] = np.mean(data[groupidx==ci,1])

print(cents)

# draw lines from each data point to the centroids of each cluster

lineColors = 'rkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgmrkbgm'

for i in range(len(data)):

if groupidx[i]==-1:

plt.plot(data[i,0],data[i,1],'k+')

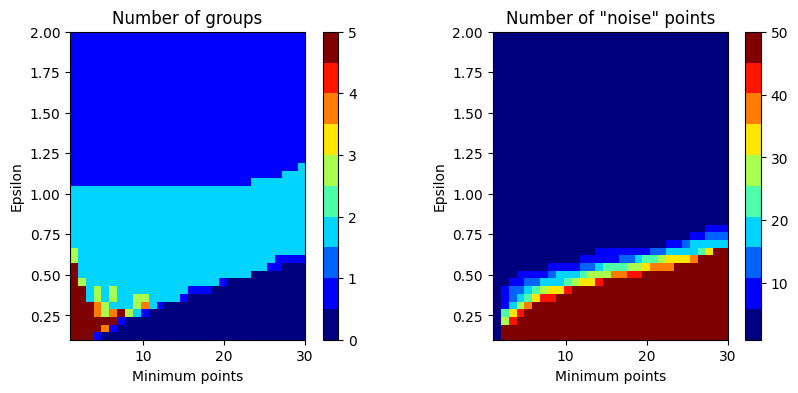

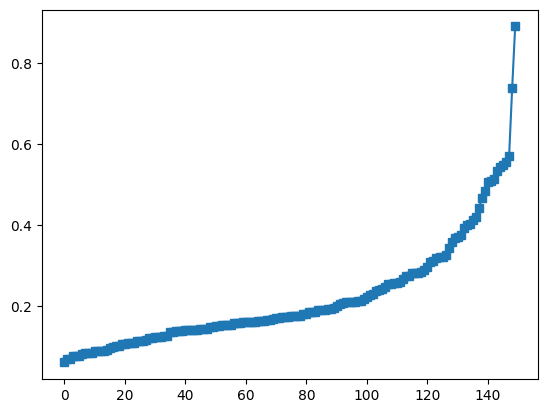

else: